Numerical Linear Algebra : Summary

]

Prof.Trefethen

]

Prof.Trefethen  David Bau

David Bau

Prof Lloyd N.Trefethen and his student, David Bau have neatly organized all aspects of numerical linear algebra in to a set of 40 lectures. Each lecture revolves around one specific idea. I will try to summarize the key points of these lectures.

Every person who has solved any real life math fin problem knows that the classic numerical algebra is dead. Iterative methods are almost always used for finite difference methods, solving pde’s, optimization. Books such as these , which explain things clearly and give the intuition + math behind iterative methods should be considered as a treasure. To appreciate the iterative methods, it is important to know the classic algos. In that sense 31 lectures out of 40 lectures in the book revolve around the classic way of solving stuff. If you have the patience to slog through the 31 lectures, then you will definitely see the iterative methods in a different perspective altogether.

Lecture 1 & 2 : Matrix-Vector Multiplication , Orthogonal Vectors and Matrices

The first two lectures starts off with defining matrix multiplication, Null Space, Rank, Inner Product, Outer product , Unitary Matrices etc. Just enough concepts to get going instead of swamping the reader with Gaussian elimination /pivoting etc which is the way books on Linear Algebra typically begin.

Lecture 3: Norms

Norms assume an important role in numerical linear algebra as they serve as a measure of size and distance in a vector space. One generally comes across Vector Norms in lots of places but Matrix Norms are little special as one can define them based on the norms induced by the vector subspace OR norms independent of the vector subspace, which are called General Matrix Norms. The only norm that I had known before reading this book was inner product induced norms. Not many books discuss norms at a detailed level as this book does, and more so , right at the beginning of the book. Bounds for the norm of product of two matrices are derived in this note and are very helpful in deciding the backward stability of the algos.

Lecture 4: The Singular Value Decomposition

This note introduces the basic notation of SVD. SVD which was used relatively sparsely by scientists and engineers a few decades ago, has become one of the pivotal algos in Linear algebra. SVD is motivated by a nice geometric fact - “ SVD is the image of the unit sphere under any m*n matrix is a hyper ellipse”. By using SVD you get to play a Surgeon’s role , meaning you get to see all the internals of the matrix – the four subspaces of the matrix , Row space, Column Space, Null Space, Left Null Space. There are two versions of SVD, the Reduced SVD and the Full SVD. This note discusses both these versions and the differences between them. The other fascinating aspect of SVD is that every matrix has an SVD representation. This is akin to “ Every Square matrix having a Schur Decomposition”

Lecture 5: More on the SVD

Considering that SVD is used in tons of applications, it is not surprising that there is an extra chapter on SVD. This starts off by saying that SVD helps in choosing a smart basis in such a way that the new basis reduces Ax = b to a diagonal matrix relationship.

There are three differences between SVD and EVD( Eigen value decomposition)

-

SVD gives out orthogonal basis whereas EVD need not always(except for Normal operators)

-

SVD works with 2 basis whereas EVD works with one

-

SVD exists for any matrix whereas EVD works for square matrices only

The other appealing properties of SVD are

-

With SVD , you can have two different basis for the matrix of transformation.

-

With respect to the two orthogonal basis, the matrix of the transformation is a diagonal matrix

-

Inverse of a matrix can easily be found by SVD

-

One can do an SVD on the matrix and get the maximum singular value to be the norm of the matrix where norm is induced by a norm 2 vector space

In fact most of the important theorems in linear algebra can be easily derived using SVD.

Lecture 6: Projectors

It can safely be said that Projection operator is possibly THE MOST important operator of linear algebra. Most of the statistics based problems need a projection operator. Therefore this note becomes important as all the properties of Projectors are summarized. Some of them are

-

Range( I - P ) = null( P ) where I is identity operator

-

Null( I – P ) = Range( P )

-

Projector operator is idempotent

-

If Range( P ) = S1 and Range( P ) = S2, then P is the projector onto S1 along S2.

-

An Orthogonal Projector is one that projects on to a subspace S1 along S2 , where S1 and S2 are orthogonal.

Lecture 7: QR Factorization

This lecture introduces QR factorization because it connects almost all the algos in numerical linear algebra. Sadly this is never taught at the beginning of the linear algebra courses . Existence and Uniqueness of QR factorization is proved and its usage in solving Ax = b types problem is shown. When you step back from the world of matrices and then think about QR factorization in general, it strikes that you can use this in any application to make it computationally efficient. If you want to project a function in the vector space of polynomials, you can very well do that by starting with any basis and using QR to represent the problem in orthogonal basis.

Lecture 8: Gram-Schmidt Orthogonalization

Gram-Schmidt Orthogonalization is a method of factorization that can be termed as “triangular orthogonalization”, meaning a set of triangular matrices are applied on the matrix to produce an orthogonal matrix. Classic Gram-Schmidt is numerically unstable and hence this lecture introduces modified Gram-Schmidt which is nifty trick on the original algo. Unless you go over the two algorithms very carefully, you might not notice the difference between classical and modified algo. The operation count for Gram Schmidt is about ~ 2mn^2 for a m by n matrix. This note introduces a geometrical way of computing the operation count , an idea which is very interesting.

**

Lecture 9: MATLAB

**

Three examples are provided to motivate the reader to use Matlab for visualizing matrix operations. Well , You can use any stats /math based software. The authors main intention is that linear algebra stuff is understood only when you play with matrices.

First example deals with orthogonalization of Vandermonde Matrix which gives rise to Legendre polynomials. Why are Legendre polynomials used ? Well if you can represent a function as a linear combination of Legendre polynomials, you have the advantage that components are orthogonal. This makes a lot of computations easier.

Second example is a wonderful way to illustrate machine epsilon using QR decomposition and Gram Schmidt method. It is a superb example that shows the difference between between classical Gram Schmidt algo and Modified Gram Schmidt algo. The visual that is presented with this example is something that any reader will never forget.

The last example shows the instability of different algos when a 2*2 matrix has a rank close to 1. This basically hints at the problem of ill-conditioning. Another interesting exercise mentioned in this chapter is the use of SVD for image compression. Take an image and you can use SVD to find just enough rank 1 matrices to represent the sparse data. It can be best understood if you actually create an image and apply svd to compress the data.

Lecture 10: Householder Triangularization

This note introduces Household reflector method as an improvement over QR decomposition. QR decomposition using triangularization can be improved using Householder Orthogonal triangularization If there is m*n matrix, the ops count for improved gramschmidt is 2m n^2 whereas for Householder triangularization is 2m n^2 – (2/3) n^3 .Almost all the sparse matrices use Household triangularization for decreasing the ops count

Lecture 11: Least Squares Problems

This lecture introduces the three standard methods to solve Ax = b.

-

Linear equations – flip side : numerically unstable

-

QR decomposition to tide over numerical issues – flip side : bad results with rank deficient matrices

-

SVD works well with rank deficient matrices.flip side – yet to come across !

Lecture 12: Conditioning and Condition Numbers

This note introduces the concepts of condition number. Conditioning pertains to the perturbation behaviour of a mathematical problem. Stability pertains to the perturbation behaviour of an algorithm used to solve that problem on the computer.One can work with Absolute condition number / relative condition number. Both absolute and relative condition numbers have their uses, but the latter are more important in numerical analysis. This is ultimately because the floating point arithmetic used by computers introduces relative errors rather than absolute ones;

The note then gives examples of ill-conditioned problems. Polynomial root-finding is an example of ill-conditioned problem and hence finding eigen values from the characteristic equation needs to be avoided.

The condition for Matrix-Vector multiplication is then derived . The note ends with a very important theorem that talks about the condition number for Ax=b. The essence of this theorem is that one can at least give bounds to the condition number of the problem. The bound which is the norm of ( A )times norm of (A inv) has a special name , “Condition Number of the matrix”. This is relevant to the matrix and is not to be confused with the condition number of a problem.

Lecture 13: Floating Point Arithmetic

If you have never thought about machine representation of numbers , then this note tells you all. Floating point arithmetic basically deals with the way in which computer stores numbers. Any computer programmer/ algo developer / modeller must know about this floating point arithmetic. There are very subtle issues that need to be understood. In any case, this note only deals with machine epsilon. If you are comfortable with Big O notation,IEEE standards for number representation, you can safely skip this note.

Lecture 14: Stability

This note introduces the concepts of accuracy, stability and backward stability. A stable algorithm is one which gives nearly the right answer to nearly right question. A backward stable algorithm is one that gives the right answer to nearly right question. An important theorem mentioned in this lecture is that

For problems f and algorithms f’ defined on finite-dimensional spaces X and Y, the properties of accuracy, stability, and backward stability all hold or fail to hold independently of the choice of norms in X and Y.

The above theorem allows you to use any norm for checking the stability and accuracy aspects.

**Lecture 15: More on Stability

**

This note talks about stability and condition number of the matrix. It shows that solving eigen values using characteristic equation is a bad idea as the polynomial resulting from the characteristic equation is usually ill-conditioned, meaning whatever method you use to extract the roots of the polynomial, they are going to be extremely sensitive to minor perturbations in the polynomial coefficients.

Given a problem, it is obvious that conditionality is going to affect the accuracy of the solution.Hence if one has to choose an algo or evaluate an algo, it is backward stability that matters. The condition number of A is so global a property as to be more or less invisible at the level of the individual floating point operations involved in solving Ax = b.

The basic takeaway of this note is that algo needs to be evaluated from backward stability perspective and the solution needs to be evaluated based on conditionality and backward stability perspective.

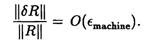

Lecture 16: Stability of Householder Triangularization

This note introduces the concept of stability using an example of an ill-conditioned matrix. If you generate a random triangular matrix, the column spaces of the matrix are going to be exceedingly ill-conditioned as a function of the entries of the matrix. This means that whatever algo you use for QR decomposition, the individual Q and R are going to show large absolute and relative errors. However the astonishing thing is that Householder gives the product of QR in such a way that QR differs from the original matrix by a very small value which is of the order (machine epsilon). This example in the note makes you realize that algo needs to be judged from backward stability . WHY? Well, the problem could be ill conditioned and hence blaming the algo for errors is not the right approach.

To reiterate, the most important aspect in linear algebra is backward stability, meaning, giving a right answer to an approximately right question. Householder Triangularization fits this requirement and hence qualifies as a backward stable algorithm. The QR thus obtained from Housholder Triangularization would be the right factorization for a perturbed A instead of A. It is stated in the note that Householder triangularization is backward stable for all matrices.

QR factorization is not an end in itself but is a means to solve the MEGA equation of linear algebra Ax = b. If the Householder factorization QR is backward stable, is it enough to make it a satisfactory piece of larger algorithm? Thankfully the answer is YES and hence the algo for calculating x in Ax = b using Householder triangularization is ALSO backward stable. Basically the point that this note makes is that , if you can solve x using an algo for an approximate A, i.e A + delta(A) where delta(A) depends on machine epsilon, then the algo is good enough to be used in various applications.

This note also mentions about SVD where one can apply SVD on an ill conditioned matrix and get a lesser forward error as compared to House Holder factorization. Though SVD does not reduce the forward error to 0 as the other component effecting the result is the conditionality of the matrix. A extremely important thing to remember, i.e conditionality and algo both effect the results. Algo can be evaluated based on backward stability.

Lecture 17: Stability of Back Substitution

This note systematically deals with all the aspects of a back substitution algorithm in proving that it is a backward stable algorithm. For a simple back substitution algo for Rx = b, it is shown that a small perturbation in R gives an exact answer for x, i.e (R+ delta(R)) x’ = b where x’ denotes the floating point representation of x.

The above bounds are derived for the R matrix. Basically we are seeing a component wise bounds rather than a weaker norm based bounds in the above proof. In the early decades of numerical analysis after the Second World War, most error estimates were obtained in the norm-wise form, whereas in more recent years there has been a shift toward component-wise analysis, since such results are sharper and algorithms that satisfy component-wise bounds are less sensitive to scaling of variables.

Lecture 18: Conditioning of Least Squares Problems

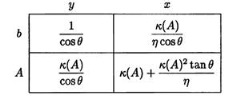

This note talks about the effect of perturbation of A and b on x and y. The following matrix summarizes the results in terms of three dimensionless parameters κ , θ , η. Kappa represents the conditioning number, theta gives an idea of the closeness of fit, and eta represents how much the projection falls short of condition number.

It is imperative to calculate these 4 numbers as soon as you see as Ax =b least squares problem before using an algo. The numbers in the matrix will give an intuitive idea about the nature of problem.

Lecture 19: Stability of Least Squares Algorithms

This note checks various algos solving Ax = b, such as Householder Triangularization, Cholesky, Modified Gram Schmidt, SVD for stability and accuracy issues. The results from various algos gives the reader an idea of relative strengths and limitations of the decomposition algorithms. The input matrix is deliberately chosen as a rank deficient Vandermonde matrix to show the performance of various algos. After going through this note, you will always tend to use SVD for any factorization for rank deficient matrices, as it is likely to be a superior over other algos. The highlight of this chapter is that it will make the reader realize that , Whenever one comes across error estimate, one needs to always gauge the individual contribution of ill-conditioning AND contribution of algo-performance. There is also a nice reasoning given for the instability of cholesky decomposition algo used to solve Ax = b.

Lecture 20: Gaussian Elimination

This note starts off by stating the various factorization in words

-

Gram-Schmidt : A = QR by Triangular Orthogonalization

-

Householder : A = QR by Orthogonal Triangularization

-

Gaussian Elimination : A = LU by Triangular Triangularization

The Gaussian elimination is one of the basic factorizations used for solving a system of equations. Basically it involves solving Ax = b by factorizing A as LU and then using forward and backward substitutions. The problem with plain vanilla LU is that it is not backward stable. Operation count is about (2m^3)/3 flops for a m by n matrix.

Lecture 21: Pivoting

This note introduces complete pivoting / partial pivoting to improve the performance of Gaussian elimination. Basically this involves factorizing PA = LU or PAQ = LU , where P and Q are row and column permutation matrices

Lecture 22: Stability of Gaussian Elimination

This note goes in to the details of the reasons behind the instability of Gaussian elimination. The note says “We shall not attempt to give a full explanation of why the matrices for which Gaussian elimination is unstable are so rare. This would not be possible, as the matter is not yet fully understood. But we shall present an outline of an explanation.” The note concludes with a positive tone saying that Gaussian elimination with partial pivoting algo is highly unstable for certain matrices of class A. For instability to occur, however, the column spaces of A must be skewed in a very special fashion, one that is exponentially rare in at least one class of random matrices. Decades of computational experience suggest that matrices whose column spaces are skewed in this fashion arise very rarely in applications. Basically you can still rely on the partial pivoting algo for most of real world applications.

Lecture 23 :Cholesky Factorization

This note talks about a matrix decomposition applied to Hermitian positive definite matrices. The algo is a variant of Gaussian elimination which exploits the symmetry present in the matrix. The operation count is (m^3 ) / 3 flops. All of the subtleties of the stability analysis of Gaussian elimination vanish for Cholesky factorization. This algorithm is always stable. Intuitively, the reason is that the factors of R can never grow large. The stability of Cholesky factorization is achieved without the need for any pivoting. Intuitively, one may observe that this is related to the fact that most of the weight of a hermitian positive definite matrix is on the diagonal.

Lecture 24: Eigenvalue Problems

The note starts off by saying that eigen value problems are particularly interesting as the algos for solving them are powerful, yet not obvious. Basic definitions of eigen value and eigen vectors are provided at the beginning of the note.Eigenvalue and Eigen vectors are useful for two reasons, one algorithmic, the other physical. Algorithmically, eigen value analysis can simplify solutions of certain problems by reducing a coupled system to a collection of scalar problems. Physically , eigen value analysis can give insight in to the behaviour of evolving systems governed by linear equations.

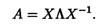

Usage of EVD produces the following form

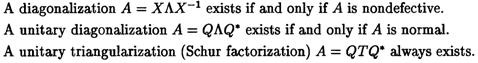

The characteristic polynomial is then defined and is used to define algebraic multiplicity.The concept of algebraic multiplicity and geometric multiplicity is introduced so as to classify matrices as defective matrices. A defective matrix is one where algebraic multiplicity of one or more eigen values is greater than the respective geometric multiplicity. What are these multiplicities? Algebraic multiplicity of an eigen value is its multiplicity as a root of the characteristic equation. Geometric multiplicity of a eigen value lambda is equal to the dimension of (A-lambda* I) ^n for a n by n matrix. The note concludes by introducing Schur Factorization, which is applicable to any square matrix.

The major takeaway from this note is as follows :

**

Lecture 25: Overview of Eigenvalue Algorithms

**

The next five lectures talk about various aspects of eigen value algorithms. I was very sceptical about going over these lectures as it was going in to a depth which was probably not required for my work. I mean eigen(A) gives all the results that you need. Why should I care about the internal workings about EVD algos ? Harbouring such feelings, I decided to speed read and see whether there is any thing that I could get from the dense lectures about eigen value decomposition.

The overview note was brilliant to say the least. It makes the brilliant connection between a polynomial equation and an EVD problem and puts forth a beautiful argument that since there is no formula that exists for expressing the roots of an arbitrary polynomial, given its coefficients, there is no closed form solution/no finite number of steps that can be programmed to find the eigen values. This means that eigen value solver must be iterative.

The goal of eigen vector solver is to produce sequences of numbers that converge rapidly towards eigen values. In this respect eigen value computations are more representative of scientific computing than solutions of linear equations. There are two phases for any eigen solver. Phase1 is to reduce the input matrix in to an upper Hessenberg matrix and in the second phase, an iteration is applied to generate a formally infinite sequence of Hessenberg matrices that converge to Triangular form. Once Triangular matrix is formed, Similar matrices argument is used to show that eigen values of the initial matrix and the triangular matrix are the same.

Lecture 26: Reduction to Hessenberg or Tridiagonal Form

This note shows , why is it a bad idea to use householder type matrices for reducing the input matrix to a upper Hessenberg matrix. Subsequently it goes in to developing the algo that actually reduces the input matrix in to upper triangular Hessenberg matrix. The next 4 lectures goes in to great depths to explain the inner workings of an EVD algo. The last lecture in this note talks about the implementation of SVD Algo.

The last part of the book deals with iterative methods which are extremely useful in solving real world problems. In the recent times, they have overshadowed the classic methods of linear algebra. In one sense, if you are going to use computers to solve something, most often than not, you will have to resort to iterative methods. I will try to post the main points of the last part of the book, “Iterative methods” , some other day.