What is a p-value anyway : Summary

Statistics stands on two pillars, estimation and inference. Pretty much anything you work on in stats, you end up either estimating something or inferring something. If you take a random sample of people who have taken a stats101 course at some point in their lives and ask them what the course was all about, the most likely answer would be, “It was something to do with p-values”. Statistics at its core is about comparing sets of numbers with each other, with theoretical models and with past experience. But most introductory textbooks are full of scary formulae and distribution tables that students are expected to use. I think if you are a teacher introducing statistics to a new batch of students, it will do a world of good if you dramatize a specific act: walk into the class and tear out all the pages in the appendix that have these tables and arcane formulae, which only scare people away from developing a statistical mindset. It will at least drive home the point that there is no formal textbook for interpreting real-life data. Why do you think textbooks make assumptions about the distribution of the data? Pause for a few seconds and think about it. Well, one of the main reasons is that unless you make some assumptions, you cannot fill up the textbook with neat formulae. Unless you assume a certain distribution, you cannot write down a neat formula for an estimate, a neat formula for a confidence interval, and so on. What’s the use of those formulae? Not much.

As an example, let’s say you record your evening commute times from office to home, daily for a month or two. You want to see whether your average commute time on Monday is less than on Friday. What would you do? Well, you can calculate the mean of the commute times on both days. But what you are doing there is estimating the average time. What you intended was inference, i.e. whether your commute time on Monday is less than Friday’s. If you open up a stats book, it will tell you to use some formula that involves the means of the commute times, the pooled standard deviation, etc. From that you form a t statistic and check whether the corresponding p-value is less than 0.05. The whole procedure mentioned in the book is brimming with assumptions. Why should one assume the same variance across the two samples? OK, if you don’t assume that, there is another formula that makes the adjustment. Either use this formula or that formula... argh. There is a fundamental problem with this approach: the use of parametric statistics to determine the critical value at which the observed t becomes significant. In these days of abundant and cheap computing power, there is little need to follow such a textbook approach anymore. One can use nonparametric, distribution-free methods even for as simple a test as the one above. This was unthinkable a few decades ago, when computing power and software were expensive. For the above example, you can generate, let’s say, 500 resampled data sets and get a practical answer to the question. In today’s world of free open source software, it would be unthinkable for someone to open a textbook to answer the question mentioned above.
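To make the commute example concrete, here is a minimal sketch of one distribution-free approach, a permutation test. The post does not say which resampling scheme it has in mind, so the choice of a permutation test is an assumption, and the commute times below are made up purely for illustration. The function name `permutation_p_value` is hypothetical too.

```python
import random
from statistics import mean

# Hypothetical commute times in minutes (made-up numbers, not real data)
monday = [42, 38, 45, 40, 37, 44, 39, 41]
friday = [48, 52, 44, 50, 47, 55, 46, 49]


def permutation_p_value(a, b, n_resamples=500, seed=0):
    """One-sided permutation test of whether mean(a) is less than mean(b).

    Under the null hypothesis the day labels carry no information, so we
    shuffle the pooled times, split them into two groups of the original
    sizes, and count how often the shuffled difference in means is at
    least as extreme (as negative) as the observed one.
    """
    rng = random.Random(seed)
    observed = mean(a) - mean(b)
    pooled = list(a) + list(b)
    at_least_as_extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = mean(pooled[:len(a)]) - mean(pooled[len(a):])
        if diff <= observed:
            at_least_as_extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly 0
    return (at_least_as_extreme + 1) / (n_resamples + 1)


p = permutation_p_value(monday, friday)
print(f"observed difference in means: {mean(monday) - mean(friday):.3f} minutes")
print(f"one-sided permutation p-value: {p:.3f}")
```

No equal-variance assumption and no lookup table is needed here; the reference distribution comes from the shuffled data itself. The 500 resamples match the number mentioned above, though more resamples would give a finer-grained p-value.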

Books such as these are helpful to the general public who don’t run statistical analyses in their day-to-day work, but have to interpret statistical results in their professional or personal life. The book does not have a single formula, yet it imparts more knowledge than most of the boring, sleep-inducing textbooks that one comes across. It weaves together some important stats101 concepts by telling 34 stories, stories that one can easily read while remembering the lesson associated with each. Stories are always a great way to teach, learn and understand stuff. Let me attempt to list the concepts that these 34 stories cover:

-

Basic difference between estimation and inference

-

In some situations mean is better than median , while in others it is vice-versa

-

For Skewed data, median and interquartile range summaries gives a better understanding of the data than mean and standard deviation

-

What is skewness and how do you identify the same in the data?

-

Mean and Standard deviation tell you everything about the data(if it comes from a normal distribution). Technically they are called sufficient statistics. Histograms do the job where mean and median are not enough.

-

Story that hints at the utility of nonparametric regression

-

Normal distribution and its parameters

-

If data doesn’t look normal, you can take a log transform and work with it. Why does log transform make the data normal in many situations ? Verbalizing it with out any formulae is where the stories do a good job.

-

How does one check whether data is from a normal distribution ?

-

What does standard error of an estimate mean ? Well, actually modern non parametric statistics has algos to figure out standard error of the standard error of an estimate. That’s a mouthful , but there are situations where it matters

-

What do you understand by a confidence interval ?

-

What is a statistical tie ?

-

How does one verbalize p-value ?

-

Story of a dry toothbrush to illustrate the basic funda of p-value

-

What does one mean by Null Hypothesis ?

-

Difference between a t test and Wilcoxon test. What are parametric statistics and non parametric statistics ?

-

Concepts such as sample size, precision , statistical power, Type I and Type II errors

-

Story to convey the usage of univariate regression, multivariate regression and logistic regression.

-

Multiple regression is not a magic wand that you can use to churn out models. It has a ton of assumptions and the more you are aware of them, the less crap you dish out/ less crap you take from the news/articles/papers that have a statistical garb.

-

When a child coughs, the mother overreacts and father underreacts ? What are the consequences ? A story that illustrates the concept of specificity and sensitivity of a test,i.e probability that the test shows positive/negative given the patient has disease/no-disease. If a doctor has to discuss a test result with a patient, specificity and sensitivity are of not much help. One is supposed to talk about probability that the patient has disease/no-disease given the test shows positive/negative, called the positive predictive value and negative predictive value.

-

How does a decision tree work ?

-

A story that analyzes the blunders one sees in academic papers that report a barrage of p-values for everything

-

Weeding out unnecessary p-values - A paper that involves testing 150 odd patients and that ends up reporting 126 separate p-values, i.e almost one p-value for a patient! Heights of lunacy

-

A story that drives home the point that chi-square and ANOVA provide inference and not estimation. Similarly correlation provides estimation and not inference. Any statistical test that provides p-values is basically an inferential procedure and one cannot use it for estimation

-

Words of caution in the context of regression

-

Explaining the idea of “Regression to the mean"using a simple story

-

Misapplication of conditional probability in O.J Simpson’s case,Sally Clark’s case

-

Dangers of multiple hypothesis testing

-

A story that reiterates that p-values are about inference and not about estimation

-

A story that talks about statistical errors creeping in because of not “starting the clock” simultaneously for test and control group

-

There is no right way of doing statistics. It is all problem,purpose, context dependent

-

Importance of reproducibility in statistics. This is a topic that is dear to my heart. May be 5-10 years back there was no good infra to do it. But now thanks to amazing efforts of R community and Python community, creating reproducible research has become easy.

-

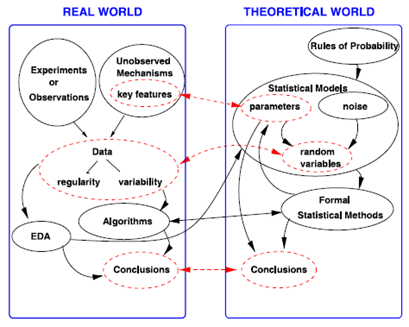

Statistics has to link math to whatever field you are working. This linkage is what makes working in stats such a fun thing. Here is a wonderful illustration that is spot on about the real world and statistical world. Universities, Schools, Textbooks focus on the right side of the picture. They teach you all the math, probability, models to equip you enough, so that you can go in to the real world, and make all the connections.

-

Statistics is about people. Even though it involves working with data, after all the results that you obtain ultimately affect people. Quoted here is the story of John snow and his statistical work that world got a handle on cholera epidemic.
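The sensitivity/specificity item in the list above turns on a distinction that is easy to check with a little arithmetic. Here is a minimal sketch that converts sensitivity and specificity into positive and negative predictive values using Bayes’ rule. The function name `predictive_values` and the input numbers are hypothetical, chosen only for illustration. Note that the conversion needs one more input that the test itself cannot supply: the prevalence of the disease.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) via Bayes' rule.

    sensitivity = P(test positive | disease)
    specificity = P(test negative | no disease)
    prevalence  = P(disease) in the population being tested
    """
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)  # P(disease | test positive)
    npv = true_neg / (true_neg + false_neg)  # P(no disease | test negative)
    return ppv, npv


# Hypothetical test: 90% sensitive, 90% specific, disease prevalence of 1%
ppv, npv = predictive_values(sensitivity=0.90, specificity=0.90, prevalence=0.01)
print(f"PPV = {ppv:.3f}, NPV = {npv:.4f}")
```

With these made-up inputs the positive predictive value is only about 8%, even though the test looks good on paper. That is why a doctor talking to a patient needs the predictive values rather than sensitivity and specificity.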

Takeaway :

A p-value is the probability that the data would be at least as extreme as those observed, if the null hypothesis were true. The author takes the reader through a set of 34 stories to explain various concepts around the p-value without using any math. Somewhere in the book he says,

Good Statistics is a bit like a pair of high quality stereo speakers. It allows you to hear the data clearly without distortion. Yet the best speakers in the world isn’t going to make a CD sound good if the music was badly played or recorded.

Well, real-world data is messy and chaotic. Statistics, via its high quality stereo speakers, voices out all the screeches, scratches, crackles and the occasional melody. This helps in reducing Type I and Type II errors. Books such as these help in clearing away the unnecessary math and formula garb that one encounters in various stats101 books.