An Introduction to Generalized Linear Models

[

[

This book is written by Annette J. Dobson, a Biostatistics Professor at University of Queensland(Brisbane). I had come across this book way back in May 2010 and had worked through out the book. Here is what I wrote about it back then. While trying to understand local likelihood modeling, I realized that I had forgotten some basic principles relating to diagnostics and model evaluation for GLM. Sometimes I wonder what makes things stick. May be there is no magic bullet at all. One has to keep revisiting concepts to understand and remember them.

With that mindset, I reread this book again after more than two years . My understanding of GLM models is so much better this time. I will attempt to list down my totally random thoughts from this book on my second read.

-

Understanding the connections between Chi-square distribution and Quadratic forms.

-

Useful to know the formula for general exponential family of distributions as you can compute the mean and variance quite easily of any distribution that follows this general family.

-

The exponential families include many of the most common distributions, including the normal, exponential, gamma, chi-squared, beta, Dirichlet, Bernoulli, categorical, Poisson, Wishart, Inverse Wishart etc.

-

A number of common distributions are exponential families only when certain parameters are considered fixed and known, e.g. binomial (with fixed number of trials), multinomial (with fixed number of trials), and negative binomial (with fixed number of failures). Examples of common distributions that are not exponential families are Student’s t, most mixture distributions, and even the family of uniform distributions with unknown bounds

-

Can you do a GLM modeling on any response variable from the exponential family of distributions ? No. It can be done only on canonical form ( a specific form of the exponential family of distributions)

-

Connection between link function and natural parameter of an exponential family of distributions

-

If the link function can be estimated numerically, then such a class of models are called “Generalized Additive Models” , GAM models. I have heard that they are very useful in micro-market structure studies.

-

If likelihood goes up as a Predictor value goes up – Use a logistic model , i.e log odds modeling

-

You can’t form a GLM model with the assumption of Pareto distribution for response variable. Why ? Pareto is not in a canonical form amongst the exponential family of distributions

-

Common model used for time to failure modeling is Weibull

-

qqplot() function in R that can be used to compare observed quartiles vs theoretical quartiles. Plot is between sample~theoretical quantiles

-

estimating parameter of an known distribution given Yis entails calculated log likelihood, taking the derivative of log likelihood to obtain score function. This score function is equated to 0 to get MLE estimate. One can use Newton Raphson to get this estimate. It is called “Method of Scoring”

-

If one looks at MLE for varying thetas, the score function should be sharp near MLE to get lesser variance in the MLE estimate. If the score function is kind of flat near MLE, then there could be any number of MLE estimates. In other words, MLE estimate has high variance. So, MLE estimate variance is inversely related to derivative of score function. This kind of intuition helps in understanding the relationship between MLE estimate and variance of score function.

-

Like the way Newton Raphson’s iterative equation can be linked to MLE estimate, similarly GLM estimation can also be referred to a variant of Newton Raphson. The procedure to obtain estimates is called iterated weighted least squares method

-

Using Newton Rapshon algo, generalized linear model estimates can be found. It is better to work out the model estimates on a toy dataset.

-

Learnt the procedure to compute GLM estimates using simple matrix operations and iterative procedures. This kind of working from scratch is useful knowledge as one knows what’s exactly happening behind off the shelf packages

-

While reviewing some old code, I found that I could not reconcile the standard errors from manual calculation with that from glm function. I found the bug and fixed it. The standard errors from pen and paper calculations now match with the ones from glm function.

-

The code I wrote 2 years back for some estimation problems was completely wrong. I was so dumb to code that way. Now when I see the code , I am happy that I am able to fix it with ease. What has this code review taught me, besides writing effective R code ? Well, it has taught me something about getting used to math symbols and equations. In the last two years, more than anything else I have grown more comfortable with handling the math behind the stat equations.

-

The book has an exercise problem that asks one to fit an exponential distribution for a response variable with a log link function. Big learning from R forums is that you fit it with a gamma distribution and estimate the model. You use the shape parameters in the summary(fit, dispersion=1) to get the standard errors. Nuisance parameters don’t matter for estimating coefficients in a GLM framework. They matter in computing the standard errors of the coefficients

-

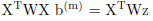

- This is the basic equation for GLM estimation. Let’s say you are fitting an exponential distribution for the response variable and you have a log link function, the R function glm has family variable that takes Gamma distribution. So how does one get the estimates for Exponential distribution that is basically Gamma with shape parameter as 1. I came across a post in R forum that finally answered my question. For estimating the coefficients of GLM, the shape parameter doesn’t matter because if you look at the equation above, the shape parameters that appears in W matrix gets cancelled from both sides. However for estimating the standard error , the matrix

needs to be used.

needs to be used.

- This is the basic equation for GLM estimation. Let’s say you are fitting an exponential distribution for the response variable and you have a log link function, the R function glm has family variable that takes Gamma distribution. So how does one get the estimates for Exponential distribution that is basically Gamma with shape parameter as 1. I came across a post in R forum that finally answered my question. For estimating the coefficients of GLM, the shape parameter doesn’t matter because if you look at the equation above, the shape parameters that appears in W matrix gets cancelled from both sides. However for estimating the standard error , the matrix

-

glm(y~1, family =whatever) gives the MLE for scale parameter for y if y belongs to an exponential family distributions with canonical form

-

You can get least square estimates by using glm(y~x, family = Gaussian). The estimates match if the model Y = Xb + error (iid with known variance)

-

Score function is a damn important function as the Variance of score function is related to the standard errors of the MLE

-

Newton Raphson’s iterative procedure framework is immensely useful in remembering the way one needs to iterate the Score function in a GLM to obtain the estimates

-

Sampling distribution of deviance statistic is used for model selection

-

Score statistic is something that is useful to compute for a likelihood estimation for a single parameter or multiple parameter case

-

One can use GLM framework to derive least square estimates . Its kind of obvious though as GLM is a superset of Simple Linear Model.

-

Deviance statistics is useful in hypothesis testing. Typically for variable that does not have nuisance parameters, Deviance statistic calculated from the sample follows a chi-square distribution.

-

In cases where there are nuisance parameters, deviance statistic in its original form cannot be computed. Hence an alternative statistic that is a function of deviance statistic is calculated so that hypothesis testing can be carried out. Classic case is that of Normal distribution based model where sigma is the nuisance parameters. Deviance cannot be directly calculated. Hence the ratio of two chi-square variables is taken and F statistic is used in Hypothesis testing.

-

Connection between standardized residuals and variance estimate in a linear regression framework

-

Deviance typically results can be approximated by a non-central chi square distribution.

-

Sampling distribution of MLE estimators

-

Sampling distribution of Score statistics

-

For a binary response variable, the link function cannot be chosen in an arbitrary way. The fact that mean value of the random variable lies between 0 and 1, means that one can use only a restricted set of link functions like

-

Cumulative Distribution

-

Inverse of Standard Normal (Probit – logit)

-

Extreme value distribution (complementary log log function)

-

-

Alternative to deviance is weighted sum of squares also called Pearson Chi-square statistic

-

Hosmer Lemeshow statistic asymptotically has a chi square distribution with dof equal to # of groups – 2. It provides a convenient form of testing hypothesis when the predictor variable is continuous and response variable takes either 0 or 1.

-

2(Likelihood of fitted – Likelihood of minimal ) is called likelihood ratio chi-squared statistic

-

Residuals for a binary response variable can be

-

Pearson Chi-Squared residual

-

Standardized Pearson or Chi-Squared residual

-

Deviance Residual

-

Standardized Deviance Residual

-

-

Important aspect of “Assessing the adequacy of Models“ is Overdispersion .Overdispersion is one of the things to test incase the current model is a bad fit

-

In a linear regression model, you can see the random component right in the equation. For a GLM it is kind of embedded as one usually sees the equation for expected value of Yi. But keep in mind that assumption of iid Y can be violated and that leads to overdispersion

-

Sum(residuals(fit)^2) gives deviance whereas Sum(residuals(fit, type=”Pearson”)^2) gives Pearson chi-square deviance

-

Deviance and Pearson Chi-squared fitness of test can be used for testing the model fitness. However Chi-Squared test is better as it performs better than Deviance in the presence of small frequencies. Why ? Small frequencies give rise to higher variance and hence the Pearson Chi-square weighs the observations according to inverse of variance. Thus all small frequencies are given lesser representation in the over all estimation procedure.

-

D(Deviance), X^2 (Chi-squared goodness of fit) , C(likelihood ratio chi-squared statistic) , pseudo R^2 are the four goodness of fit stats that are thrown as output in many statistical packages.

-

Joint probability distribution of Yjs conditional on their sum n, is a multinomial distribution. This is a key fact used in defining contingency table

-

Hierarchical models have a different meaning in the frequentist world. It means that if there is a higher-order term in the model, then all the related lower-order terms are also included

-

What comes to your mind when you hear contingency table data ? Well like any sort of data that you come across , does the data be made to fit any distribution to get a handle on it ? Using the fact that joint distribution of poisson conditional on sum is a multinomial, you now have to estimate the parameters of a multinomial.

-

Log-linear model is one where expectation of Yi can be written as offset plus a linear model term

-

Looking at contingency data, besides the usual Chi-squared statistic, what are the other models that can be fitted ? Log-linear additive model, Log-linear Saturated Model, Log-linear Minimal model ?

-

For a 2 by 2 contingency variable, the model forms for the following are stated clearly in the chapter

-

Log-linear Additive Model

-

Log-linear Saturated Model

-

Log-linear Minimal Model

-

-

One can account for overdispersion by taking an alternative model than poisson, i.e., negative binomial distribution

-

mu in poisson model is always rate specified in terms of exposure. Log-linear model has an offset term to account for this.

-

ANOVA framework can be applied to additive, saturated, minimal models.

-

Lets say you see a cross tab with data. Anything you want to analyze, you must always think in terms of null , alternate hypo. After framing the analysis in those terms, one must think about the joint distribution of the response variables. Does it come from exponential family ? Does one have to take care of over-dispersion ? What’s the link function to be used ?

I have also made a reference sheet containing various formulae mentioned in various chapter of the book.

Obviously the logical step after dwelling in the GLM world is the Generalized Additive Modeling (GAM) world. I hope to explore GAM world soon.

Reading this book is like rising above the Normal Linear Model world

&

seeing a far more broader picture of various models.