Principles of Data Mining : Review

One of the reasons for going over this book is, to shuttle between the macro and micro world of modeling. One can immerse in specific type of techniques/algos in stats, forever. But I can’t. I typically tend to take a break and go over macro aspects of modeling from time to time. Books like these give an intuitive sense of “What are the types of models that one builds? I like such books as they make me aware of inductive uncertainty associated with building models. Let me summarize the main points in the book.

Chapter 1 - Introduction

-

The main thing that differentiates Data Mining from Traditional Statistics is that the former involves working with ``observational data'' whereas the latter comprises experimental controlled data.

-

Data Mining is often seen in the broader context of Knowledge Discovery in databases (KDD).

-

DM is an interdisciplinary discipline with principles from Statistics, Machine Learning, AI, Pattern Recognition, Database technology, etc.

-

The representations sought in a data mining exercise can be categorized as ``global model'' and ``local model''. Global model, as the name suggests involves summarizing an interesting pattern relevant to the entire dataset and local model is more neighborhood specific.

-

5 broad tasks in Data Mining are

-

EDA : Interactive visualization, dimensionality reduction

-

Descriptive Modeling : Density estimation, cluster analysis, segmentation

-

Predictive Modeling: Classification and Regression

-

Discovering Patterns and Rule: Figuring out Associative Rules

-

Retrieval by Content: You have a pattern of interest and the objective is to look for similar patterns in the dataset

-

-

Components of Data Mining Algorithm

-

Model or Pattern Structure : Culling out global pattern or local pattern

-

Score Function: A function to evaluate the model fit. There can Prediction based selection functions or Criteria based selection functions. (Deviance, Likelihood function, least square, Bias vs. Variance, AIC, Mallow’s CP)

-

Optimization and Search Method: Estimating the parameters by optimizing score function.

-

Data Management Strategy.

-

-

Difference between traditional statistics and DM is that in the latter deals with very large datasets and is largely observational data. Sometimes you have the entire population in which case some of the inferential procedures learnt in Frequentist world become useless.

Chapter 3 - Visualizing and Exploring Data

-

EDA is data-driven hypothesis, i.e., you visualize the data and then form hypothesis. This is unlike the general hypo testing framework where null and alternate are driven more from general knowledge / domain specific knowledge.

-

Some of the visuals used to summarize univariate data are histograms, Kernel density estimates, Box and Whisker plots, scatter plot

-

Some of the visuals used to summarize multivariate data are contour plots, persp plots, scatterplot matrix, trellis plots etc.

-

PCA is a technique for dimensionality reduction, while Factor analysis involves specifying a model for the data. Scree plot, biplot are the visuals relevant to these type of dimensional scaling analysis

-

Solving for eigen values of a p*p matrix is O(p^2)

-

Finding covariance matrix of N*p matrix is O(N*p^2)

-

Multidimensional scaling involves representing the data in lower dimension, while at the same time preserving the actual distance between the raw data points as much as possible.

Reading this chapter brought back old memories of multivariate data analysis that I had done. Need to revisit multivariate stuff soon. It needs remedial work from me as I have been spending time on other things.

Chapter 4 - Data Analysis and Uncertainty

This chapter covers the Frequentist inference and Bayesian inference. Well, the content is just enough to get a fair idea of the principles behind them. In doing so, it touches upon the following principles/concepts/terms/ideas :

-

Difference between Probability theory and Probability Calculus

-

All forms of uncertainty is explicitly characterized by Bayesian. However to keep some objectivity Jeffrey’s priors can be used that depend on the Fischer Information

-

Importance of Marginal Density and Conditional density in various models

-

Liked the bread + cheese or bread+butter example to illustrate Conditional density

-

Simpson’s paradox – Two conditional statements cannot be aggregated in to a single conditional statement

-

Best Unbiased Estimators

-

MSE as a sum of bias squared and variance

-

Likelihood function as on object for estimation and inference

-

Sufficiency Principle

-

Bayesian estimate is a smoothened version of likelihood estimate

-

Bayesian Model Averaging

-

Credible intervals

As rightly pointed out, Bayesian inference has skyrocketed in popularity in the last decade or so, because of computing power available to everyone. Thanks to Gibbs Sampling, MCMC, and BUGS, one can do a preliminary Bayesian analysis on a desktop.

One thing this chapter made it very clear is that there is little difference between sampling with replacement and sampling without replacement in the data mining world? Why? Because of huge amount of data available, you just take a big enough sample and you get fairly good estimate of the parameters for the assumed distribution. Also the chapter says some topics from the traditional statistics discipline such as experimental design, population parameter estimate, etc., are useless in the big data world / data mining world. Instead issues like data cleaning, choosing the right kind of sampling procedure become very critical.

Chapter 5- A Systematic Overview of Data Mining Algorithms

-

Specific Data Mining Algorithmic components:

-

Data mining task, i.e. the algorithm is used to address ( Visualization, Classification, Clustering, Regression, etc.)

-

Structure (functional form) of the model or pattern we are fitting to the data (linear regression model, hierarchical clustering model, etc.)

-

Score function (Judge the quality of fit)

-

Search or Optimization method we use to search over parameters and structures

-

Data Management technique. For massive datasets, techniques for data storage and retrieval become as important as the algo being used

-

-

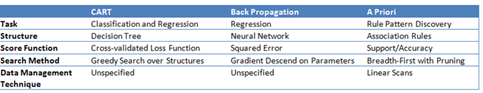

Once we identify the data mining task , then the data mining algo can be considered as a tuple

- {model structure, score function, search method, data base management}

I like this chapter as it gives an overall structure to the DM principles using five components. The first component, i.e. the task for a given problem is usually straight forward to agree upon. The next 4 components have a varying degree of importance based on the problem at hand, based on the person who is working on the problem. For a statistician, Structure of the model and Score function might dominate his thinking and efforts. For a Computer Scientist or a Machine learning expert, the Search /Optimization and DB techniques are the areas where his thinking and efforts are focused on. The relative importance of the components varies depending on the task at hand. A few pointers:

-

Smaller datasets: Structure and Score function

-

Large datasets: Search and DB management

-

Neural Networks : Structure

-

Vector space algorithm for text retrieval : Structure of the model or pattern

-

Association rules: Search and DB management

So, the component that becomes critical depends on the problem at hand.

This tuple {model structure, score function, search method, data base management} is a good way to look at things in the modeling world. However depending on the domain the relative importance of these components varies. Also these components in the tuple are not independent. They have a correlation structure so to say.

This chapter gives three examples where various components become important relative to the other and drives the modeling effort.

I have never looked at models and implementation from this perspective. I think this is by far the biggest learning from the book. It has given me a schema to think about various models and their implementation. It is also going to change the way I look at various articles and books in stats. For example the book on GLM, typically written by statisticians is going to focus more on the structure and score function. It is not going to focus on Search algos. It’s fine for toy datasets. But let’s say you want to fit GLM for some signal for a high frequency tick data. Search and DB Mgmt will become far more important and might even drive the modeling process. May be if you want to choose between Gamma link function and Gaussian link function, because of computational efficiency , you might end up choosing a Gaussian link function despite it showing a higher deviance as compared to Gamma link function.

Having a tuple structure mindset helps in moving out of silos and think broadly at the overall task at hand. If you are a stats guy, it is important to keep in mind, the search and Database management components before reading / building anything. If you are a CS guy, it is important to keep in mind the models and score functions, etc.

I think this chapter more than justifies my decision to go over this book. I have learnt a parsimonious and structured language for description, analysis and synthesis of data mining algorithms.

Chapter 6 - Models and Patterns

The chapter begins with the example of Data compression because it a useful way to think of difference between model and pattern. Lower resolution image transmission is like a model and High resolution local structure transmission is like a pattern

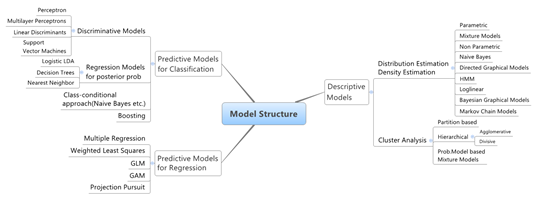

The chapter then goes on to talks systematically about various models. The following models are covered in the chapter:

-

Predictive Models for Regression

-

Linear Model( Transformation the predictor variables, transforming the response variables )

-

Generalized Linear Models

-

Local Piece wise Model Structures for Regression

-

Polynomial regression

-

Splines

-

-

Non Parametric Local Models

-

Locally weighted regression

-

Kernel Estimators

-

Nearest neighbor methods

-

-

Quasi likelihood : Relaxed assumptions on the distribution of the stochastic components

-

-

Predictive Models for Classification

-

Linear Discriminant Models where direct model is built between x and various class labels

-

Logistic Type Models : Posterior class probabilities are modeled explicitly and for prediction the maximum of these probabilities is chosen

-

Conditional Class Models – Called the generative models – Conditional distribution for a given class is modeled

-

-

Descriptive Models : Probability Distribution and Density functions

-

Parametric Models

-

Nonparametric Models

-

Mixture Models

-

Markov Chain Models

-

Graphical Models

-

Naïve Bayes Models

-

First Order Bayesian Graphical Models

-

-

Models for Structured Data

-

First Order Markov Models

-

Kth Order Markov Models

-

Hidden Markov Models

-

AR Models

-

Kalman Filters

-

Mixture of AR models

-

Each chapter ends with a “Further Reading” section that contains information about various books that an interested reader can refer. This is valuable information as it serves as an experienced guide for the data mining literature.

Chapter 7 - Score functions for Data Mining Algorithms

Scoring functions are useful to select the right model. In the regression framework, least square function can be used as a scoring function. In the case of GLM, deviance can be used as scoring function. The chapter starts off by saying that one needs to distinguish between various types of Score functions

-

Score functions for Models Vs Patterns

-

Score functions for Predictive structures Vs. Descriptive structures

-

Score functions for Fixed Complexity Vs. Different Complexity

There exists numerous Scoring function for Patterns, but none have gained popularity. This is mainly because there is a lot of subjectivity in acknowledging whether a pattern is valuable or not.

The theory behind scoring function for models is well developed and applicable to the real world.

-

Predictive Models : Least squares, Misclassification errors,

-

Descriptive Models (density estimation) : Negative Log likelihood, Integrated Squared error

-

Comparing Models with varying complexity : Cross validation , AIC, BIC, Posterior probability of each model, Adjusted R-squared , Mallow’s Cp

This type of overview of scoring functions is very valuable info. These terms crop up in various modeling techniques. For example, Cross Validation and Cp pop up in local regression, Deviance in GLM, etc. Some of these functions are empirical in nature whereas some have an asymptotic distribution.

Other takeaways from this chapter

-

Negative Log likelihood score function performs poorly in tails. If the true probability is close to 0, then log function penalizes the true probability!

-

Scoring functions for clustering algos are difficult. It is rather futile to talk of true means of clusters. So ,the score function makes sense only in the context of a question that the data miner is seeking

-

Nice way to explain over fitting : By building an overly flexible model, one follows the data too closely. Since at any given value of independent variable, the dependent variable (Y) will be randomly distributed around the mean, the flexible model is actually modeling the random component of the Y variable!!

-

Mean – Variance is a good way to think theoretically about the tradeoff. It is of little practical utility as the bias term contains true population mean , the very thing that one is trying to estimate

-

Bayesian approach to model validation is different than the frequentist methods where the model with the highest posterior probability for the given data is generally chosen. Typically the integral involved has no closed form and one has to resort to MCMC.

Chapter 8 - Search and Optimization Methods

Given a score function, it is important to find the best model and best parameters for a given model. In one sense there are two loops that need to run, first on a set of models and second inner loop on the parameters of each model.

Parameter spaces can be discrete or continuous or mixed. The chapter starts off with general search methods for situations where there is no notion of continuity in the model space or parameter space being searched. This section includes discussion of the combinatorial problems that typically prevent exhaustive examination of all solutions.

Typically if one is coming from Stats background, model fitting usually involved starting with a null model or a saturated model and then comparing the model of interest with them. If there are p parameters to be fitted there can be 2^p model evaluations. However this approach becomes extremely cumbersome in data mining problems. Although mathematically correct, this viewpoint is often not the most useful way to think about the problem, since it can obscure important structural information about the models under consideration.

Since score function is not a smooth function in the Model space, many traditional optimization techniques are out. There are some situations where the optimization can be feasible, i.e. when score function is decomposable, when the model is somewhat linear or quasi-linear so that inner loop of finding the best parameter for a model is not computationally expensive. So obviously with computational complexity, the best way out is heuristic based search. The section then gives an overview of state-space method, Greedy Search method, Systematic Search methods (breadth-first, depth-first, branch-and-bound).

If the score function happens to be continuous, then the math and optimization is pleasing as you can use calculus to get estimates. As an aside, the score function here should not be confused with the score function in MLE context, where score function is the first derivative of the log likelihood with respect to the parameters. The section starts off with describing simple Newton Raphson method and then shows the parameter estimation for univariate and multivariate case. Gradient descent methods, Momentum based methods, Bracketing methods, back propagation methods, iterated weighted least squares, simplex are some of the algos discussed. The chapter then talks about Constrained Optimization, where there is linear/nonlinear score function and linear/nonlinear constraints.

EM Algo is covered in detail. If you are handling financial data, this is a useful technique to have in your toolbox. The algo is a clever extension of likelihood function to depend on hidden variable. Thus the likelihood function has true parameters as well as hidden variables. An iteration between Expectation and Maximization algorithm does the job. Probably the clearest explanation of this algo is in Yudi Pawitan’s book, In all Likelihood.

The chapter ends with stochastic search and optimization techniques. The basic problem with nonstochastic search is that the solution is dependent on the initial point. It might get stuck at the local minimum. Hence methods such genetic algos and simulated annealing can be used so as to avoid seeking local minimas.

This chapter has made me think about the fact that I have not implemented some of the algos mentioned in the book at least on a toy dataset. I should somehow find time and work on this aspect

Chapter 9 - Descriptive Modeling

-

Model is global in the sense that it applies to all the points in the measurement space. Pattern is a local description, applying to some subset of the measurement space. The former can be termed as a summary or descriptive model. The latter can be termed as predictive model, where one is interested in knowing the future values of a specific set of observations

-

Descriptive Models, as the name goes try to make a mathematical statement about the entire dataset. What sort of mathematical statements? Well, they can be probability density functions, joint distribution functions, class labeling etc.

-

Score functions relevant to Descriptive Models : MLE Score function, BIC Score function, validation log likelihood

-

Various Models for Probability distributions

-

Parametric density models

-

Mixture distribution and densities (EM Algo for mixed models)

-

Non Parametric density models

-

Histograms

-

Kernel density estimators

-

-

Joint distribution of Categorical data

-

Chi-Square

-

Multinomial models

-

Loglog models

-

Acyclic directed graphical models

-

-

-

Cluster Analysis

-

Partition-Based Clustering

-

Hierarchical Clustering

-

Agglomerative

-

Divisive

-

-

Probabilistic Model-Based Clustering Using Mixture Models(EM is one of the algos used)

-

-

For Density estimation, techniques based on cross-validation can be useful but are typically computationally expensive. Visualization is better

-

Kernel methods are useful only in low dimensional spaces

-

Joint distribution estimation becomes a daunting problem as dimensionality goes up. You have to no choice but to impose structure. Either assumes that the variables are independent or conditionally independent and then build a model around it.

-

The chapter makes a reader very obvious that some basic understanding of graph theory is a must if you want to model conditional independence models

-

Family of Log-linear models is a generalization of acyclic directed graphical models. If you learn linear regression and then graduate it to GLM, you see a much broader picture and your understanding of linear regression improves a lot. In the same way graphical models improve your understanding of log-linear models

-

Validity of clustering algorithms is difficult to check.

-

Score functions for partition-based clustering algos are usually a function of incluster variation and between cluster variation

-

One of the basic Algos for partition-based clustering is K means

-

Agglomerative methods provide a clustering framework for objects that cannot be easily summarized as vector measurements.

-

Probabilistic based clustering can be implemented using EM algo.

-

K means sometimes is better than mixture models because mixture models may give unimodal structures.

Chapter 10 - Predictive Modeling for Classification

Having a tuple [model or pattern, score function, search and optimization, database] view point is very important while building and testing models. Off the shelf softwares have only a specific combination of the tuple built in to them. Even in the data mining literature, certain combos of model-score function-optimization algos have become famous. This does not mean that they are the only ones . At the same time, it is not prudent to experiment with all combinations of the tuple.

-

Predictive modeling can be thought of as learning a mapping from an input set of measurements x to a scalar y. The mapping is typically a function of covariates and parameter. If y is categorical then the prediction methods fall under classification. If y is continuous then they fall under regression.

-

Supervised classification is to model the way the classes have been assigned. Clustering or Non Supervised classification means defining the class labels in the first place

-

Probabilistic model for classification : Assume a prior distribution for classes. Assume a distribution of data conditional on the class. Use Bayes to update the priors

-

Discriminative approach : Directly model input x to one of the m class labels

-

Regression approach : Posterior class probabilities are modeled explicitly and for prediction the maximum of these probabilities is chosen ( example logistic models)

-

Class-Conditional approach : It is called the generative model as one is trying to figure out the probability of input given a specific class. Basically you assume k classes, k prior probabilities, for each of the k classes you build whatever model you feel is the right model, use bayes to update priors

-

-

Discriminative and Regression approach are referred to as diagnostic methods whereas class conditional approaches are called sampling methods

-

If you are given input vector x and class labels c, you can directly model dependency between x and c (Discriminative approach), or class probabilities can be modeled(Regression approach), or model conditional class probabilities ( Class-Conditional approach)

-

The number of parameters to be fitted is maximum for Class-Conditional approach. Next comes Regression approach that requires lesser parameters. Next comes discriminative approach that requires even lesser parameters

-

Linear Discriminants : Search for the linear combination of two variables that best separates the classes. You find a straight line in such a way that if you reorient the data so that one of the axis is that line, then you see that class means are separated to the max

-

Tree Methods

-

Nearest neighbor methods belong to the class of regression methods where one directly estimates posterior probabilities of class membership

-

Naïve Bayes classifier is explained very well

-

Evaluating and comparing classifiers: Leave one out, cross validation, jack knife etc.

Chapter 11 - Predictive Modeling for Regression

The basic models are linear, generalized linear and other types of extended models.

-

The models that fall in this chapter are the ones where the dependent variable is an ordinal/interval/ratio variable

-

Models mentioned are

-

Simple Linear Regression

-

Multiple Linear Regression

-

Generalized Linear Models

-

Generalized Additive Models

-

Projection Pursuit Models

-

-

Verbalizing GLM – Estimation of parameters involves a transformation of response variable , taking heteroscedasticity to generate a weighted least square equation and iterating over the equation to get estimates.

-

Verbalizing GAM – Same of GLM with one tweak. Instead of combining the covariates in a linear fashion, the link function is a non parametric combination of covariates. Basically take a local linear regression model and generalize it.

Here is a map of models mentioned in the book :

Takeaway:

Takeaway:

Through out the book, the tuple structure {model structure, score function, search method, data base management} is used to structure a reader’s thought process. Using this structure, the book gives a bird’s overview of all possible models that can be built in the data mining world. Awareness of these possibilities will equip a modeler to take inductive uncertainty in to their modeling efforts.