Matrix Algebra Useful for Statistics : Review

Matrices are everywhere in statistics. Every linear model involves some kind of generalized inverse calculation on the design matrix. Statisticians, data analysts etc. are always dealing with datasets that have repeated measurements, repeated observations, noise etc.

The design matrix is therefore practically never a full row rank matrix. This means that the traditional inverse, applicable only to a nonsingular square matrix, has to give way to something less restrictive, and hence a generalized inverse $G$ is needed. $G$ is not unique: if you find one, you can manufacture a ton of other solutions to the set of equations. Some texts on linear algebra introduce it as the pseudo inverse, the name implying that it is not exactly an inverse. A generalized inverse of a matrix $A$ only has to satisfy the first of the four Moore-Penrose conditions, $AGA = A$; the pseudo inverse proper satisfies all four.
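A minimal sketch of the non-uniqueness, using a made-up 2 x 2 singular matrix rather than an example from the book. The helper names `matmul` and `is_generalized_inverse` are my own; the only thing being checked is the first Moore-Penrose condition, A G A = A.

```python
from fractions import Fraction


def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def is_generalized_inverse(A, G):
    """Check the first Moore-Penrose condition, A G A = A."""
    return matmul(matmul(A, G), A) == A


# A singular (rank 1) matrix, chosen only for illustration.
A = [[1, 2], [2, 4]]

# Two different matrices that both satisfy A G A = A.
G1 = [[1, 0], [0, 0]]
G2 = [[0, 0], [0, Fraction(1, 4)]]

# The identity matrix is not a generalized inverse of A, since A * A != A.
identity = [[1, 0], [0, 1]]

print(is_generalized_inverse(A, G1), is_generalized_inverse(A, G2))
print(is_generalized_inverse(A, identity))
```

Exact fractions are used so that the equality check is not disturbed by rounding.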

One can compute the inverse of a square matrix in a number of ways. However, computing $G$ is tricky. One procedure that I knew before reading this book is the SVD: factorize the matrix using the singular value decomposition and compute the pseudo inverse. This book gives a nice algorithm to compute $G$ that one can work through by hand for small matrices to get a feel for the various solutions possible. Why work on small matrices to compute $G$? Well, as they say, we do not learn to solve quadratic equations by starting with one that has unwieldy coefficients just because it occurs in real life; learning to first solve a simple one is far more instructive. Similarly, working with a tractable algorithm that computes $G$ gives the understanding needed to use more sophisticated algorithms. Estimation of a multiple regression model depends on the normal equations $X'Xb = X'y$. To solve this set of equations, one needs to know a generalized inverse of $X'X$. OK, enough about $G$, as this book is not about a specific matrix. The book focuses on matrix theory and not on computations. So, all you need to work through this book is pen and paper.
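To make the link between the normal equations and a generalized inverse concrete, here is a small sketch with a made-up rank-deficient design matrix (an intercept plus two group dummies). The data, the names `X`, `y`, `G`, `b0`, `b1`, and the hand-picked `G` (built by inverting the lower-right 2 x 2 block of X'X and padding with zeros) are all assumptions for illustration, not the book's worked example.

```python
from fractions import Fraction


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


# Intercept column equals the sum of the two dummy columns, so X'X is singular.
X = [[1, 1, 0], [1, 1, 0], [1, 0, 1]]
y = [1, 3, 5]

XtX = matmul(transpose(X), X)   # [[3, 2, 1], [2, 2, 0], [1, 0, 1]]
Xty = matvec(transpose(X), y)   # [9, 4, 5]

# A hand-picked generalized inverse of X'X (assumption, see the text above).
G = [[0, 0, 0], [0, Fraction(1, 2), 0], [0, 0, 1]]

# One solution of the normal equations.
b0 = matvec(G, Xty)

# Another solution: b0 + (G X'X - I) z for an arbitrary z.
GA = matmul(G, XtX)
GA_minus_I = [[GA[i][j] - (1 if i == j else 0) for j in range(3)] for i in range(3)]
z = [3, 7, -2]
b1 = [u + v for u, v in zip(b0, matvec(GA_minus_I, z))]

print(b0, b1)
print(matvec(X, b0), matvec(X, b1))  # the fitted values agree
```

The two coefficient vectors differ, yet both satisfy the normal equations and give the same fitted values, which are just the group means.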

The book was published in 1982. Here is the blurb about the author from Wikipedia:

Shayle Robert Searle Ph.D. (April 26, 1928 - February 18, 2013) was a New Zealand mathematician who was Professor Emeritus of Biological Statistics at Cornell University. He was a leader in the field of linear and mixed models in statistics, and published widely on the topics of linear models, mixed models, and variance component estimation. Searle was one of the first statisticians to use matrix algebra in statistical methodology, and was an early proponent of the use of applied statistical techniques in animal breeding.

The author does not talk about statistics right away in the book. There are about 350 pages of theory before any mention of statistical applications. For those looking for a crash course in linear algebra for statistics, this book is a no-no. I was in that category a few years ago, and slowly I realized that it is not satisfying to have shallow knowledge. Well, maybe nobody in the world cares if you know the derivation of the distribution of a quadratic form. However, spending time understanding such things gives a comforting feeling that is hard to express in words. For me, there is a sense of satisfaction once I understand stuff at a certain depth. For example, I saw ANOVA output long before I understood what exactly was going on behind it. In a typical undergrad course, or even in some grad courses, that’s how things are introduced and explained. At least that’s what happened in my case. But much later in life I learnt that each line of ANOVA output is nothing but a projection on to orthogonal components of the predictor subspace, and the lines are a fancy way of representing a version of the Pythagorean theorem. Well, that’s not exactly the point of ANOVA though. Splitting the squared norm into orthogonal components is not useful for inference unless one makes a few assumptions about the distribution, and then the whole machinery rocks.
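The Pythagorean reading of ANOVA can be checked on a toy one-way layout. The two groups below are invented numbers, and the variable names are mine; the sketch only shows that the between-group and within-group pieces are orthogonal, so their squared lengths add up to the total sum of squares.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Two made-up groups of observations.
groups = [[1, 3], [5, 7, 9]]
y = [v for g in groups for v in g]

grand_mean = Fraction(sum(y), len(y))
# Each observation's fitted value is its own group mean.
fitted = [Fraction(sum(g), len(g)) for g in groups for _ in g]

total = [v - grand_mean for v in y]          # y minus grand mean
between = [f - grand_mean for f in fitted]   # group mean minus grand mean
within = [v - f for v, f in zip(y, fitted)]  # y minus group mean

ss_total = dot(total, total)
ss_between = dot(between, between)
ss_within = dot(within, within)

print(dot(between, within))             # orthogonal components
print(ss_total, ss_between, ss_within)  # total = between + within
```

Turning these sums of squares into F tests is where the distributional assumptions come in; the decomposition itself is pure geometry.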

The author mentions in the preface that the only prerequisite for this book is high school algebra. Indeed, after reading the book I think he is spot on. He uses differentiation in a few places, but those pages are prefaced with the author’s note that it’s OK to skip them. This is one of those rare books that take the reader from basic stuff like “what a matrix looks like” to using it in the “statistical inference” of a linear model.

Many years ago I was told that Axler’s book on linear algebra is the one to read to get a good understanding. I followed the advice and realized at that time that every matrix is nothing but a linear transformation. There is an input basis, there is an output basis, and the transformation is represented by the matrix. However, books on statistics use special matrices and operators. You need to connect ideas in matrix theory and statistics to understand stats well. In that sense this book is an awesome effort. In 12 of the 15 chapters, the author explains the various concepts that are used in stats. By the time a reader reaches the last three chapters, more often than not, he/she is thirsting for more, and I guess that’s a sign of a great book. Some of the equations you had always known appear to twinkle and shine brightly.

The book starts with a basic introduction and talks about the need for working with matrices. If you think about it, whatever field you are working in, matrices let you state a problem independent of its size. They are also useful for organizing computer techniques to execute those methods and to present the results. The chapter is basically an intro to the notation used throughout the book.

The second chapter gives a list of basic matrix operations. In doing so, the author introduces partitioning, transposing, inner products, outer products etc. The reader is encouraged to view matrix multiplication as linear combinations of rows and linear combinations of columns. When you see the product matrix AB, the first thing that should come to mind is that the rows of AB are linear combinations of the rows of B and the columns of AB are linear combinations of the columns of A. Similarly, premultiplying a matrix by a diagonal matrix is equivalent to scaling each of its rows by the corresponding diagonal entry, and postmultiplying scales each column. This viewpoint is crucial for understanding the various canonical forms of a matrix. By using elementary row and column operations, you can transform a matrix into a bare-bones structure that is very powerful. If the operations are chosen well, this structure is a diagonal matrix from which one can cull a ton of information. I think if you work with R for some time, this kind of thinking becomes natural, as with every line of R code you write you are either using vectorized functions or building vectorized code.
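The row and column views of a product are easy to verify on small numbers. The matrices and the helper names `row_combination` and `column_combination` below are made up for this sketch.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def row_combination(A, B, i):
    """Row i of AB, built as a weighted sum of the rows of B."""
    result = [0] * len(B[0])
    for weight, b_row in zip(A[i], B):
        result = [r + weight * b for r, b in zip(result, b_row)]
    return result


def column_combination(A, B, j):
    """Column j of AB, built as a weighted sum of the columns of A."""
    result = [0] * len(A)
    for k, a_col in enumerate(zip(*A)):
        result = [r + B[k][j] * a for r, a in zip(result, a_col)]
    return result


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
AB = matmul(A, B)

# Weights for row 0 of AB come from row 0 of A: 1*[5, 6] + 2*[7, 8].
print(row_combination(A, B, 0), AB[0])
# Weights for column 0 of AB come from column 0 of B: 5*[1, 3] + 7*[2, 4].
print(column_combination(A, B, 0), [row[0] for row in AB])

# A diagonal matrix scales rows when it premultiplies, columns when it postmultiplies.
D = [[10, 0], [0, 100]]
DB = matmul(D, B)
BD = matmul(B, D)
print(DB, BD)
```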

The third chapter deals with special matrices, special because of their properties and also because of their appearance in statistics. Well, what are they?

- Symmetric matrices: Covariance matrix, correlation matrix, ![clip_image001[12] clip_image001[12]](images/clip_image00112_thumb.gif) and ![clip_image002[4] clip_image002[4]](images/clip_image0024_thumb.gif) are all standard mathematical objects in statistics, and they are all symmetric matrices.

- All elements equal: Denoted by ![clip_image003[4] clip_image003[4]](images/clip_image0034_thumb.gif), this matrix is crucial for various operations like centering the data. If you have used the sweep function in R, the background of such an operation typically involves this matrix.

- Idempotent matrices: In stats, one comes across them in various places. If you project the dependent data vector on to the subspace orthogonal to the input vectors, the projection matrix you get is idempotent. I think this is the standard place where one comes across an idempotent matrix. Another place where they appear is in quadratic forms. Every line of ANOVA output has a distribution attached to it. This is done by computing the distribution of the quadratic form, and idempotent matrices are crucial in proving many of the results.

- Orthogonal matrices: Well, a data analyst should make a long-lasting friendship with these matrices. In a world filled with noise, these friends help you see the data in the right way. Principal component analysis, factor analysis, ridge regression, lasso and LARS all use orthogonal matrices in one form or another. They are everywhere. Helmert, Givens and Householder matrices are some of the special orthogonal matrices mentioned in the book.

- Positive definite and positive semi definite matrices: If you have gone through Gilbert Strang’s book on linear algebra, he makes it a point to highlight these matrices everywhere. Well, rightly so, as they do appear everywhere in statistics. Depending on the nature of the matrix, the scalar multiplication can be thought of as a quadratic or bilinear form.
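As a small illustration of how the all-ones matrix and idempotency meet in centering, the sketch below builds the centering matrix C = I - J/n, where J is the n x n matrix of ones. The data vector and the names `C`, `J`, `x` are assumptions made for this example.

```python
from fractions import Fraction


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


n = 3
I = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
J = [[1] * n for _ in range(n)]  # matrix with all elements equal to one

# Centering matrix: subtracts the mean from every entry of a vector.
C = [[I[i][j] - Fraction(J[i][j], n) for j in range(n)] for i in range(n)]

x = [2, 4, 9]            # made-up data with mean 5
centered = matvec(C, x)  # deviations from the mean

print(centered)
print(matmul(C, C) == C)     # idempotent: centering twice changes nothing
print(transpose(C) == C)     # symmetric
```

Because C is symmetric and idempotent, the sum of squared deviations of x can be written as the quadratic form x'Cx.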

The fourth chapter is on determinants. It is not so much the computation of a determinant that matters. Since the multivariate Gaussian distribution, the most common distribution that one sees, has a determinant in its functional form, it is better to know some of the basic properties. More importantly, one must quickly be able to verbalize the intuition behind a few things, like the fact that the determinant of an orthogonal matrix is either 1 or -1, and the determinant of an idempotent matrix is either 0 or 1. Understanding the computation of a determinant from a cofactor viewpoint helps one connect the ideas with the eigen value decomposition, i.e. the sum of the eigen values is the trace of a matrix and the product of the eigen values is the determinant. The fifth chapter deals with computing the inverse using the determinant and cofactor method.
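These determinant facts can be checked with a short cofactor expansion. The intuition is that Q'Q = I forces det(Q) squared to be 1, and H H = H forces det(H) squared to equal det(H). The function `det` and the example matrices below are my own choices, not the book's.

```python
from fractions import Fraction


def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j, entry in enumerate(M[0]):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def shifted(M, lam):
    """Return M - lam * I."""
    return [[M[i][j] - (lam if i == j else 0) for j in range(len(M))] for i in range(len(M))]


# Orthogonal matrices: a row swap and a rotation with rational entries.
P = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]
R = [[Fraction(3, 5), Fraction(-4, 5)], [Fraction(4, 5), Fraction(3, 5)]]

# Idempotent matrices: an averaging projection and the identity.
H = [[Fraction(1, 2), Fraction(1, 2)], [Fraction(1, 2), Fraction(1, 2)]]
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

print(det(P), det(R))   # -1 and 1
print(det(H), det(I3))  # 0 and 1

# A symmetric matrix whose eigen values are 1 and 3: det(S - lam*I) vanishes at both.
S = [[2, 1], [1, 2]]
trace_S = S[0][0] + S[1][1]
print(det(shifted(S, 1)), det(shifted(S, 3)))
print(trace_S == 1 + 3, det(S) == 1 * 3)
```

Cofactor expansion is fine for pen-and-paper sized matrices like these, though it is far too slow for large ones.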

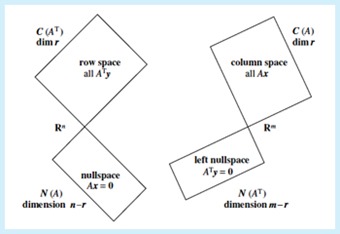

The sixth chapter of the book is on the “rank” of a matrix, a concept that is a defining characteristic of a matrix. Terms such as column rank, full column rank, row rank, full row rank, and full rank are introduced with a few examples. The idea of rank is connected with the idea of linear dependence between rows or columns of a matrix. I think Strang’s visual of the four subspaces is wonderful for understanding the concept of rank. If there is a nonzero vector that the matrix sends to zero, i.e. the null space is nontrivial, then the matrix is rank deficient: its rank (row rank and column rank are always equal) is smaller than its number of columns. No amount of theorems and lemmas can give the same effect as that of the “four subspaces visual”.

I think the highlight of this chapter is the full rank factorization of a matrix. This is also probably the first sign of the author hinting at the power of partitioning a matrix.
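A full rank factorization writes a matrix of rank r as a product K L, with K having r independent columns and L having r independent rows. The sketch below checks one such factorization for a made-up 3 x 3 matrix of rank 2; the matrices and the elimination-based `rank` helper are assumptions for illustration, not the book's construction.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def rank(M):
    """Rank via Gaussian elimination in exact arithmetic."""
    rows = [[Fraction(v) for v in row] for row in M]
    r = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


# Third column is the sum of the first two, so the rank is 2.
A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]

K = [[1, 2], [2, 4], [1, 0]]  # the two independent columns of A
L = [[1, 0, 1], [0, 1, 1]]    # how each column of A is built from them

print(matmul(K, L) == A)
print(rank(A), rank(K), rank(L))
```

Here K has full column rank and L has full row rank, and the factorization is not unique: any nonsingular 2 x 2 matrix can be slipped between K and L.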

The seventh chapter is on canonical forms. There are various canonical forms mentioned in the chapter. All of them are fancy names for transforming a matrix into a diagonal form by premultiplying and postmultiplying by elementary matrices. The first canonical form mentioned is the equivalent canonical form. This exists for every matrix. Take any matrix, apply the relevant operations to its rows and columns, and you can manufacture a diagonal matrix. This diagonal matrix is very special, as it immediately tells you the rank of the matrix.

![clip_image005[4] clip_image005[4]](images/clip_image0054_thumb.gif)

Why is this canonical form useful? Well, firstly it helps one compare ranks across matrices. Secondly, this form readily tells us whether the matrix has full column rank, full row rank, or full rank. All of these are crucial for the existence of right/left inverses of the matrix. For symmetric matrices, there is another canonical form that goes by the name congruent canonical form. There is also a canonical form under similarity that comes up in the chapter on eigen values. I think the highlight of this chapter is the proof that a matrix of rank r can always be factored into two matrices, one with full column rank and one with full row rank.

The eighth chapter is on generalized inverses. Well, I have said enough about it at the very beginning of this post. All I can say is that this chapter gives you a firm grounding on generalized inverses.

The ninth chapter is on solving linear equations. The first concept introduced in the chapter is that of consistency: any linear relationship among the left-hand sides of the equations must also hold among the right-hand sides. Otherwise, the equations are inconsistent. This is one of the crucial chapters in the book, as it covers the use of a generalized inverse to solve a set of linear equations. Once you find one solution, you can manufacture any number of solutions from it. So, the solution to the consistent equations $Ax = y$, with $A$ having $q$ columns and $G$ being a generalized inverse of $A$, is given by

$$\tilde{x} = Gy + (GA - I)z$$

for any arbitrary vector $z$ of order $q$. This means that you can write down the solution to [equation missing in source] as [equation missing in source] for any arbitrary vector ![clip_image011[1] clip_image011[1]](images/clip_image0111_thumb.gif) of order $q$. From here on, the book touches upon so many ideas that are so crucial in understanding a linear model.
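The way one generalized inverse generates a whole family of solutions can be tried out by hand or in a few lines of code. The sketch below assumes the standard result that, for consistent equations A x = y and any generalized inverse G of A, every vector G y + (G A - I) z is a solution. The matrix, right-hand sides and function names are made up for the example.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def is_consistent(A, G, y):
    """Equations A x = y are consistent exactly when A G y = y."""
    return matvec(A, matvec(G, y)) == y


def general_solution(A, G, y, z):
    """Return G y + (G A - I) z for an arbitrary vector z."""
    GA = matmul(G, A)
    n = len(GA)
    GA_minus_I = [[GA[i][j] - (1 if i == j else 0) for j in range(n)] for i in range(n)]
    return [p + s for p, s in zip(matvec(G, y), matvec(GA_minus_I, z))]


# Second equation is twice the first, so the right-hand side must match that.
A = [[1, 2], [2, 4]]
G = [[1, 0], [0, 0]]  # satisfies A G A = A
y = [3, 6]

solutions = [general_solution(A, G, y, z) for z in ([0, 0], [5, 1], [-4, 7])]
print(solutions)
print([matvec(A, x) for x in solutions])  # every one reproduces y
print(is_consistent(A, G, [3, 7]))        # relationship broken on the right-hand side
```

Changing z moves along the null space of A, which is why the left-hand side never changes.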

The tenth chapter is on partitioning. If you have been told that a conditional density in a multivariate normal distribution is also multivariate normal and you wonder why, then partitioning is one way to understand it. As an aside, if you are wondering what’s the big deal in partitioning a matrix, I think you can try this: derive the conditional density of some of the variables given the rest, in the case where all four variables are jointly multivariate normal. If you can derive it with ease, then I think you are terrific. But if you are like me, rusty with such a derivation, then you might want to pay attention to partitioned matrices. Where do partitioned matrices help? Firstly, if you can spot orthogonal matrices in a big matrix, you are better off partitioning it to exploit the orthogonality. Computing determinants is quicker once you partition. I think the biggest learning for me in this chapter was the way to look at the inverse of a matrix. A matrix inversion formula using partitioned matrices is very useful in a whole lot of places. These inversion formulas are written using Schur complements. The chapter ends with a discussion of the direct sum and direct product operators on matrices. Well, the Kronecker product is the fancy name for the direct product. As an aside, I have always replicated matrices as blocks in a sparse matrix to aid quick calculations with R. This replication makes a lot of sense once I realized that it is basically a direct product operation on the design matrix.
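The block replication mentioned in the aside can be sketched with a plain-Python Kronecker (direct) product. The functions `kron` and `direct_sum` and the small matrix `X` are hypothetical names and data for illustration; in R one would more likely reach for a built-in.

```python
def kron(A, B):
    """Kronecker product: every element of A multiplies a full copy of B."""
    return [[a * b for a in a_row for b in b_row] for a_row in A for b_row in B]


def direct_sum(A, B):
    """Block diagonal matrix with A and B on the diagonal and zeros elsewhere."""
    top = [row + [0] * len(B[0]) for row in A]
    bottom = [[0] * len(A[0]) + row for row in B]
    return top + bottom


I2 = [[1, 0], [0, 1]]
X = [[1, 2], [3, 4]]  # stands in for a small design matrix

# Replicating X down the diagonal is the Kronecker product of I with X ...
replicated = kron(I2, X)
print(replicated)
# ... which is the same as the direct sum of X with itself.
print(replicated == direct_sum(X, X))
# The order matters: X kron I interleaves the entries instead.
print(kron(X, I2))
```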

The next chapter in the book is on eigen values and eigen vectors. These are special vectors of a matrix that give rise to invariant subspaces. In goes a vector, out comes a vector that is merely a scaled version of it, i.e. the transformation gives rise to an invariant subspace. The chapter gives some examples to show the computation of eigen vectors and eigen values. The highlight of the chapter is the diagonability theorem, which gives the conditions for a matrix $A$ to be represented in the form $A = UDU^{-1}$ with $D$ diagonal. For a symmetric matrix, the eigen values are all real, the eigen vectors can be chosen to be orthogonal, and the matrix can be represented in a form that goes by the name canonical form under orthogonal similarity.
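For a symmetric matrix the diagonal form can be reached with an orthogonal matrix, so the inverse is just a transpose. The 2 x 2 example below is made up; its eigen values (3 and 1) and eigen vectors were worked out by hand, and floating point comparisons use a small tolerance.

```python
import math


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def is_close(M, N, tol=1e-12):
    """Element-wise comparison of two matrices of floats."""
    return all(
        math.isclose(a, b, abs_tol=tol)
        for row_m, row_n in zip(M, N)
        for a, b in zip(row_m, row_n)
    )


A = [[2.0, 1.0], [1.0, 2.0]]  # symmetric

r = 1 / math.sqrt(2)
P = [[r, r], [r, -r]]         # columns: unit eigen vectors for eigen values 3 and 1
D = [[3.0, 0.0], [0.0, 1.0]]  # eigen values on the diagonal
I2 = [[1.0, 0.0], [0.0, 1.0]]

print(is_close(matmul(transpose(P), P), I2))               # P is orthogonal
print(is_close(matmul(matmul(transpose(P), A), P), D))     # P'AP = D
print(is_close(matmul(matmul(P, D), transpose(P)), A))     # A = PDP'
```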

The twelfth chapter deals with a set of miscellaneous topics that are all so important. In one sense, by this point a reader is overwhelmed by the beauty of this subject. There are so many key ideas connecting linear algebra and statistics mentioned here. This chapter gives a brief summary of orthogonal matrices, idempotent matrices, an important special matrix, a summary of canonical forms, differential forms of various vector operators, vec and vech operators, the Jacobian matrix, the Hessian matrix etc.

After 350 pages of the book, the author starts talking about applications in statistics. So, the reader’s effort in going through the first 12 chapters is rewarded in the last 80 pages of the book. Chapter 13 covers the variance-covariance matrix, correlation matrices, centering a matrix, the Wishart matrix, the multivariate normal distribution and quadratic forms. The last two chapters rip apart the linear model and build everything from scratch using the concepts introduced in the book.