Mindless Statistics

The paper “Mindless Statistics” by Gerd Gigerenzer makes a case for banishing the mindless “null ritual” from statistics. In this blog post, I summarize its main points.

The author starts off by emphasizing the importance of developing a statistical toolbox. Statistics is a rich subject, best enjoyed by thinking through a given problem and applying the right tools to understand it more deeply. One should approach statistics with a bike mechanic’s mindset. A bike mechanic is not addicted to one tool; he keeps reshuffling his toolbox, adding new tools, cleaning up old ones, and throwing away the useless ones. Far from this mindset, statistics education instills formula-oriented thinking in many students. Instead of developing statistical or probabilistic thinking, most courses focus on a few formulae and teach null hypothesis testing.

What is the Null Ritual?

The author says the null ritual has three steps:

- Set up a statistical null hypothesis of “no mean difference” or “zero correlation”. Don’t specify the predictions of your research hypothesis or of any alternative substantive hypotheses.
- Use 5% as a convention for rejecting the null. If significant, accept your research hypothesis. Report the result as p < 0.05, p < 0.01, or p < 0.001 (whichever comes next to the obtained p-value).
- Always perform this procedure.

This ritual has been institutionalized in curricula, editorials, and professional associations. Many statistics texts make virtually no mention of the history behind it. It is often presented as an anonymous procedure, with no names attached. It is almost never mentioned that null hypothesis testing was Fisher’s idea and that Neyman and Pearson argued against it.

Fisher is mostly blamed for the null ritual. But towards the end of his life, Fisher rejected each of the three steps:

- “Null” does not refer to a nil mean difference or zero correlation, but to any hypothesis that can be nullified.
- Fisher thought that using a routine 5% level of significance indicated a lack of statistical sophistication.
- Null hypothesis testing was the most primitive type of statistical analysis and should be used only for problems about which we have no or very little knowledge.

Neyman and Pearson would have also rejected the null ritual, but for different reasons. They rejected null hypothesis testing, and favored competitive testing between two or more statistical hypotheses. In their theory, “hypotheses” is in the plural, enabling researchers to determine the Type-II error. The paper says that the confusion between the null ritual and Fisher’s theory, and sometimes even Neyman-Pearson theory, is the rule rather than the exception among psychologists.

If the null ritual is not appropriate, how did it spread in the first place? Its origin is in the minds of statistical textbook writers in psychology, education, and other social sciences. It was created as an inconsistent hybrid of two competing theories: Fisher’s null hypothesis testing and Neyman and Pearson’s decision theory.

What did Fisher and Neyman–Pearson actually propose?

Fisher’s null hypothesis testing:

- Set up a statistical null hypothesis. The null need not be a nil hypothesis (i.e., zero difference).
- Report the exact level of significance (e.g., p = 0.051 or p = 0.049). Do not use a conventional 5% level, and do not talk about accepting or rejecting hypotheses.
- Use this procedure only if you know very little about the problem at hand.

Note that there is no alternative hypothesis in Fisher’s framework. As a consequence, the concepts of statistical power, Type-II error rates, and theoretical effect sizes have no place in it.
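To make Fisher’s second step concrete, here is a minimal sketch that computes an exact level of significance and reports it as is, with no accept/reject language. The exact binomial test, the data (15 successes in 20 trials), and the non-nil null of 0.6 are my own illustrative assumptions, not examples from the paper.

```python
from math import comb


def exact_binomial_p_value(successes: int, trials: int, p_null: float) -> float:
    """One-sided exact p-value: P(X >= successes) if the null success rate holds."""
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical data: 15 successes in 20 trials.
# The null here is a success rate of 0.6, i.e. not a "nil" hypothesis.
p_value = exact_binomial_p_value(successes=15, trials=20, p_null=0.6)

# Report the exact value; no conventional threshold and no accept/reject verdict.
print(f"p = {p_value:.3f}")
```

The point of the sketch is what is absent: there is no alternative hypothesis, no α, and no decision.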

Neyman–Pearson decision theory:

- Set up two statistical hypotheses, H1 and H2, and decide about α, β, and sample size before the experiment, based on subjective cost-benefit considerations. These define a rejection region for each hypothesis.
- If the data falls into the rejection region of H1, accept H2; otherwise accept H1. Note that accepting a hypothesis does not mean that you believe in it, but only that you act as if it were true.
- The usefulness of the procedure is limited, among other things, to situations where you have a disjunction of hypotheses (e.g., either μ1 = 8 or μ2 = 10 is true) and where you can make meaningful cost-benefit trade-offs for choosing α and β.
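The Neyman–Pearson steps can be sketched for the μ1 = 8 versus μ2 = 10 example. This is only an illustration under assumptions that are mine, not the paper’s: normally distributed data with a known standard deviation (σ = 3 here), a one-sided test on the sample mean, and arbitrary choices α = 0.05 and β = 0.10. The function names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def neyman_pearson_design(mu1, mu2, sigma, alpha, beta):
    """Fix sample size and rejection cutoff BEFORE the experiment (assumes mu2 > mu1)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha)  # controls P(accept H2 | H1 true)
    z_beta = z.inv_cdf(1 - beta)    # controls P(accept H1 | H2 true)
    # Smallest n that meets both error rates for a known sigma.
    n = ceil(((z_alpha + z_beta) * sigma / (mu2 - mu1)) ** 2)
    # Sample means above the cutoff fall in the rejection region of H1.
    cutoff = mu1 + z_alpha * sigma / sqrt(n)
    return n, cutoff


def decide(sample_mean, cutoff):
    """Symmetric decision: act as if one of the two hypotheses were true."""
    return "accept H2" if sample_mean > cutoff else "accept H1"


# sigma, alpha and beta are illustrative assumptions.
n, cutoff = neyman_pearson_design(mu1=8.0, mu2=10.0, sigma=3.0, alpha=0.05, beta=0.10)
print(n, round(cutoff, 2), decide(9.5, cutoff))
```

Everything that matters here is chosen before any data arrive, and the outcome is a decision, not a p-value.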

If one looks carefully at both frameworks, one realizes that the null ritual is a combination of the two. The first step in the null ritual is from Fisher. The second step is adopted only partially from Neyman-Pearson, i.e., there is no mention of thinking about errors, sample size, etc. The third step comes out of nowhere, and it turns the entire null ritual into a mechanical, mindless exercise.

Feelings of guilt

The paper introduces Dr. Publish-Perish. Before conducting an experiment, he faces a dilemma. If he sets the significance level too high, the actual result might turn out to be highly significant, and he would end up understating the importance of his experiment. It goes the other way too: if he sets the significance level too low, his experiment might show inconclusive results. To resolve this dilemma, he cheats a bit and reports the significance level after the experiment. Dr. Publish-Perish feels guilty about it. He does not know that his moral dilemma is caused by a mere confusion, introduced by textbook writers who failed to distinguish the three main interpretations of the level of significance:

- Level of significance = mere convention
- Level of significance = alpha
- Level of significance = exact level of significance

For Fisher, the exact level of significance is a property of the data, that is, a relation between a body of data and a theory. For Neyman and Pearson, α is a property of the test, not of the data. In Fisher’s Design, if the result is significant, you reject the null; otherwise you do not draw any conclusion. The decision is asymmetric. In Neyman–Pearson theory, the decision is symmetric. Level of significance and α are not the same thing.

Dr. Publish-Perish’s superego demands that he specify the level of significance before the experiment. We now understand that his superego’s doctrine is part of the Neyman–Pearson theory. His ego personifies Fisher’s theory of calculating the exact level of significance from the data, conflated with Fisher’s earlier idea of making a yes–no decision based on a conventional level of significance. The conflict between his superego and his ego is the source of his guilt feelings, but he does not know that. He just has a vague feeling of shame for doing something wrong.

Dr. Publish-Perish does not follow any of the three interpretations. Unknowingly, he tries to satisfy all of them, and ends up presenting an exact level of significance as if it were an alpha level, by rounding it up to one of the conventional levels of significance, p < 0.05, p < 0.01, or p < 0.001. The result is not α, nor an exact level of significance. It is the product of an unconscious conflict.
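The difference between the two ways of reporting is easy to see in a small sketch. Both function names are hypothetical, and the "n.s." label for non-significant results is my assumption about common practice, not something taken from the paper.

```python
def null_ritual_report(p_value: float) -> str:
    """Round an exact p-value up to the nearest conventional level."""
    for level in (0.001, 0.01, 0.05):
        if p_value < level:
            return f"p < {level}"
    return "n.s."  # assumed label for "not significant"


def exact_report(p_value: float) -> str:
    """Fisher's later recommendation: report the exact level of significance."""
    return f"p = {p_value:.3f}"


for p in (0.049, 0.051, 0.004):
    print(f"{exact_report(p):<10} becomes {null_ritual_report(p)}")
```

Two nearly identical results, p = 0.049 and p = 0.051, land on opposite sides of the ritual’s verdict, while the exact report keeps them side by side.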

The author highlights a series of tests on null hypothesis testing, administered to psychology students, professors and lecturers who teach statistics, and professors and teachers who do not. The results show that the majority of people in all three groups have grave misconceptions about NHST. The author also points to a basic problem with journal editors, who demand that p-values be reported and hence are partly responsible for the null ritual.

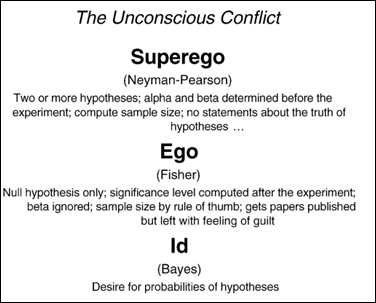

The Superego, the ego, and the id

The author is of the opinion that the null ritual persists not for lack of intellectual reasoning but for social and emotional reasons. He gives the issue a Freudian spin. The Neyman-Pearson theory serves as the superego of Dr. Publish-Perish. The Fisherian theory of null hypothesis testing functions as the ego. The Bayesian view forms the id. Its goal is a statement about the probabilities of hypotheses, which is censored by both the purist superego and the pragmatic ego.

Meehl’s conjecture, Feynman’s conjecture

The paper also mentions two conjectures that many people seem to disregard:

- Meehl’s conjecture: In non-experimental settings with large sample sizes, the probability of rejecting the null hypothesis of nil group differences in favor of a directional alternative is about 0.50.
- Feynman’s conjecture: To report a significant result and reject the null in favor of an alternative hypothesis is meaningless unless the alternative hypothesis has been stated before the data was obtained.
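One way to see where the 0.50 in Meehl’s conjecture could come from is the rough sketch below. It rests on my reading of the argument, not on anything derived in the paper: the true group difference is tiny but never exactly zero, and a directional prediction made without real knowledge is equally likely to point the right or the wrong way. The two-sample z-test with unit variance and the effect size of 0.02 are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist


def directional_rejection_probability(n_per_group, effect_size, alpha=0.05):
    """P(one-sided test rejects the nil null in the predicted direction).

    Assumes a two-sample z-test with unit variance, and a true standardized
    difference of +effect_size or -effect_size with equal probability.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha)
    shift = effect_size * sqrt(n_per_group / 2)  # mean of the z statistic
    reject_if_direction_right = 1 - z.cdf(z_alpha - shift)
    reject_if_direction_wrong = 1 - z.cdf(z_alpha + shift)
    return 0.5 * reject_if_direction_right + 0.5 * reject_if_direction_wrong


# With a tiny true effect, the rejection rate climbs towards one half as n grows.
for n in (100, 10_000, 1_000_000):
    print(n, round(directional_rejection_probability(n, effect_size=0.02), 3))
```

Under these assumptions, a large enough sample detects the tiny difference almost surely, so the directional prediction is "confirmed" whenever its sign happens to be right, which is about half the time.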

How to stop this mindless ritual

The author concludes the paper saying,

To stop the ritual, we also need more guts and nerves. We need some pounds of courage to cease playing along in this embarrassing game. This may cause friction with editors and colleagues, but it will in the end help them to enter the dawn of statistical thinking.