Practical Docker with Python


This blog post summarizes the book “Practical Docker with Python” by Sathyajith Bhat.

Context

Docker has become the de facto standard for packaging software in almost every kind of use case. AWS uses Docker containers heavily across its infrastructure. From a machine learning perspective, Docker skills have become almost imperative for working with modern ML platforms. Here are a few motivating examples for an ML engineer to learn Docker:

- You have a PyTorch or TensorFlow model that you want to train: packaging all the model-related files in a Docker container gives you complete control of the ML pipeline on AWS SageMaker.

- You have developed a model and want to test it locally before deploying it on SageMaker. The SDK offers a way to do this by pulling the image and running it as a Docker container on your machine.

- You have built a model and want to expose it via AWS Lambda: Lambda lets you package your function as a Docker container image of up to 10 GB. This is very useful because the alternative, a .zip deployment package, is limited to 250 MB unzipped.

- You want to share your model, along with its dependencies, with others in your team. The best way is to package it as an image, push it to a Docker image registry and share the image.

- You have an application that has several dependencies and works well on your local machine, and you want to make it available to a wider audience. AWS offers several options, such as Amazon Elastic Container Service (ECS), Amazon Elastic Kubernetes Service (EKS) and AWS Fargate. Using these services might require learning the AWS console or command-line options. However, the prerequisite skill for any of these platforms is a good understanding of Docker concepts, so that you can containerize the application and push your image to a private or public repository.

- Let’s say you have built an ML model that has to do batch inference. You can run a one-off batch job, but if you need batch inference on a daily basis, one of the best ways is to use SageMaker Batch Transform. More often than not, this route again requires Docker skills.

Educational resources on Docker are typically geared towards DevOps personnel. This book is different: it teaches the details of Docker infrastructure to Python programmers (i.e. pretty much everyone from a high school student to a serious ML researcher).

In this post, I list some of the main points covered in each chapter:

Containerization Primer

- Docker was founded as dotCloud in 2010 by Solomon Hykes. The team built abstraction and tooling for Linux Containers using Linux kernel features such as cgroups and namespaces, with the intention of reducing the complexity of using Linux containers. dotCloud, Inc. later renamed itself Docker, Inc. and sold its original dotCloud platform to cloudControl.

- Docker provides OS-level virtualization, known as containers, which is different from hardware virtualization.

- Docker uses cgroups, namespaces and OverlayFS to run multiple applications that are isolated from one another, all within the same physical or virtual machine.

- Advantage of containerization: all the relevant software is packaged together, so the application runs the same way on any platform where Docker is available. There is no need to prepare a machine for each new version of the application.

- Containerization through the years

  - 1979: chroot - A process started within a chroot cannot access files outside the assigned directory tree
  - 2000: FreeBSD Jails - Expanded on chroot and added features that allowed partitioning a FreeBSD system into several independent, isolated systems called jails
  - 2005: OpenVZ - Aimed at low-end Virtual Private Server (VPS) providers. It allowed a physical server to run multiple isolated OS instances, called containers
  - 2006: cgroups - Started by Google engineers. A Linux kernel feature that limits and isolates resource usage
  - 2008: LXC - Provides OS-level virtualization by combining the Linux kernel’s cgroups with support for isolated namespaces, giving applications an isolated environment

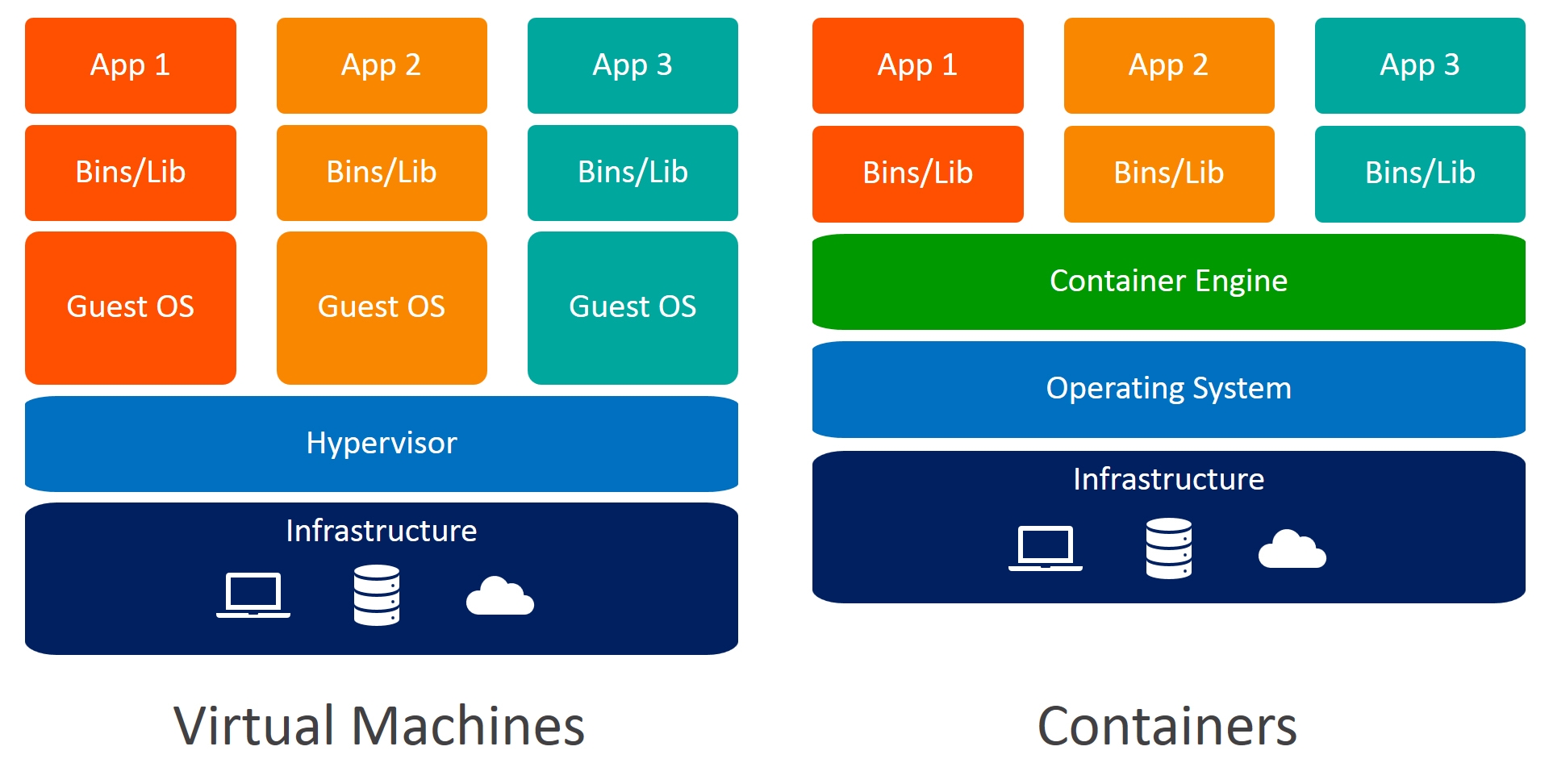

- Containers vs VMs

  - A Docker container isolates a single process (or process group), and all containers share the kernel of the same host system
  - A VM virtualizes the entire system (CPU, RAM and storage) and runs its own guest operating system

Docker 101

This chapter covers the relevant terminology one encounters in the Docker world.

- Layer - A modification applied to a Docker image

- Docker Image - A read-only template that forms the foundation of your application. It starts with a base image, and the application stack is built by adding packages on top of it

- Docker Container - When a Docker image is run on a host computer, it spawns a process with its own namespaces, known as a Docker container

- Docker Volumes - Changes made in the container’s writable layer are lost once the container is removed. To overcome this problem, Docker Volumes were created. Docker provides different ways to mount data into a container from the Docker host: volumes, bind mounts and tmpfs mounts. tmpfs mounts are stored in the host system’s memory only, whereas bind mounts and volumes are stored in the host filesystem

- Docker Registry - A place where you can store Docker images so that they can be used as the basis of an application stack

- Dockerfile - A set of instructions that tells Docker how to build an image, for example:

  - FROM: tells Docker what the base image is
  - ENV: sets an environment variable
  - RUN: runs shell commands
  - CMD or ENTRYPOINT: tells Docker which executable to run when a container is started

- Docker Engine - The core part of Docker. It provides the following:

  - Docker Daemon: A service that runs in the background on the host computer and does the heavy lifting for most Docker commands. The daemon listens for API requests for creating and managing Docker objects. It can also talk to other daemons to manage and monitor Docker containers
  - Docker CLI: The primary way to interact with Docker, through commands such as docker build, docker pull, docker run and docker exec
  - Docker API: An API for interacting with the Docker Engine. This is particularly useful when containers need to be created or managed from within applications

- Docker Compose - A tool for defining and running multi-container applications. The most common use case for Docker Compose is to run an application and its dependent services, each in its own container, with a single command

- Docker Machine - Tool for installing Docker Engines on multiple virtual hosts and then managing the hosts
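The Docker API item above is what the Docker SDK for Python (the docker package on PyPI) talks to. The following is a minimal sketch, assuming that package is installed and a local daemon is running. The helper names nginx_run_kwargs and start_nginx and the host port 8080 are illustrative choices, not part of the book. The sketch roughly mirrors docker run -p 80:80 nginx, but publishes container port 80 on host port 8080.

```python
# Assumes `pip install docker` (Docker SDK for Python) and a reachable Docker daemon.
try:
    import docker
except ImportError:  # keep the pure helper importable without the SDK
    docker = None


def nginx_run_kwargs(host_port: int = 8080, name: str = "demo-nginx") -> dict:
    """Arguments for client.containers.run(), similar to `docker run -d -p <host_port>:80 nginx`."""
    return {
        "image": "nginx",
        "name": name,
        "detach": True,                  # return a Container object immediately
        "ports": {"80/tcp": host_port},  # container port -> host port
    }


def start_nginx(host_port: int = 8080):
    """Start nginx through the Docker Engine API and return the Container object."""
    if docker is None:
        raise RuntimeError("the 'docker' package is not installed")
    client = docker.from_env()  # connects using DOCKER_HOST or the default local socket
    return client.containers.run(**nginx_run_kwargs(host_port))
```

Calling container = start_nginx(), and later container.stop() and container.remove(), corresponds to docker run, docker stop and docker rm. Keeping the arguments in a plain dictionary lets you check the port mapping without a daemon.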

The final section of the chapter introduces the following Docker commands and walks through the output of each:

- docker info
- docker image ls
- docker image inspect hello-world
- docker pull nginx
- docker pull nginx:1.12-alpine-perl
- docker run -p 80:80 nginx
- docker ps
- docker ps -a
- docker stop <container-id>
- docker kill <container-id>
- docker rm <container-id>
- docker rmi <image-id>

In any case, docker --help lists all the relevant commands along with their descriptions.

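The two pull commands above differ only in the tag. When no tag is given, as in docker pull nginx, Docker uses the latest tag. The Python sketch below shows, in simplified form, how such a reference splits into repository, tag and digest. parse_image_ref is a hypothetical helper, not Docker's parser: it does no validation and does not expand nginx to its fully qualified docker.io/library/nginx form.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'repo[:tag][@digest]' into its parts (simplified, no validation)."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)
    # A colon separates a tag only if it comes after the last '/';
    # otherwise it belongs to a registry host:port such as localhost:5000/app.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    else:
        name, tag = ref, None
    if tag is None and digest is None:
        tag = "latest"  # Docker's default when neither a tag nor a digest is given
    return ImageRef(name, tag, digest)
```

For example, parse_image_ref("nginx:1.12-alpine-perl") yields the repository nginx and the tag 1.12-alpine-perl. A registry port, as in localhost:5000/app, is not mistaken for a tag.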

Building the Python App

This chapter walks the reader through a sample Python app, which is used to demonstrate dockerizing an application. The sample app requires one to create a bot on Telegram and run a server that responds to requests made to the bot.

Understanding the Dockerfile

- A Dockerfile is an automated way to build Docker images

- Build context - The set of files available at a specific path or URL that is sent to the Docker daemon for the build. The build context can be local or remote

- .dockerignore - Allows you to define files that are excluded from being transferred during the build process. The ignore list is provided in a file named .dockerignore

- Common Dockerfile commands

  - FROM: Tells the Docker Engine which base image to use for subsequent instructions
  - WORKDIR: Sets the current working directory for RUN, CMD, ENTRYPOINT, COPY and ADD. It can be set multiple times in a Dockerfile. If a relative directory follows a previous WORKDIR instruction, it is relative to the previously set working directory
  - COPY: Supports basic copying of files into the container
  - ADD: Adds features such as automatic tarball extraction and URL support
  - RUN: Executes commands in a new layer on top of the current image and commits the result, which is available for the next steps. Two forms are used:
    - Shell form: RUN <command>, which runs the command in a shell (/bin/sh -c on Linux)
    - Exec form: RUN ["executable", "param1", "param2"]
  - CMD: Sets the default command and/or parameters, which can be overridden from the command line when the container runs
  - ENTRYPOINT: The command and parameters are not overridden by command-line arguments (only by the --entrypoint flag). Instead, all command-line arguments are appended after the ENTRYPOINT parameters
  - ENV: Sets environment variables in the image
  - VOLUME: Creates a mount point with the specified name inside the container, which Docker backs with a directory on the Docker host
  - EXPOSE: Tells Docker that the container listens on the specified network ports at runtime; it does not publish them by itself (that is done with docker run -p)
  - LABEL: Adds metadata to the image

- Guidelines and recommendations for writing Dockerfiles

  - Containers should be ephemeral, i.e. it should be possible to stop, destroy and restart them with minimal setup
  - Keep the build context minimal
  - Use multi-stage builds
  - Skip unwanted packages
  - Minimize the number of layers

- Multi-stage builds: A single Dockerfile can define both the build stage and the deployment image, so that build tools do not end up in the final image
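Two rules from the command list above can be modelled in a few lines of Python: relative WORKDIR resolution, and the way CMD and ENTRYPOINT combine with docker run arguments. This is a toy model under stated assumptions, not Docker's implementation. It covers only the exec (JSON array) forms of CMD and ENTRYPOINT, and it ignores the --entrypoint flag and variable substitution in WORKDIR. The function names are hypothetical.

```python
import posixpath
from typing import List, Optional


def resolve_workdir(workdirs: List[str], start: str = "/") -> str:
    """Apply successive WORKDIR values; a relative one is joined to the previous directory."""
    current = start
    for path in workdirs:
        # posixpath.join discards `current` when `path` is absolute
        current = posixpath.normpath(posixpath.join(current, path))
    return current


def effective_command(entrypoint: Optional[List[str]],
                      cmd: Optional[List[str]],
                      run_args: Optional[List[str]] = None) -> List[str]:
    """Exec-form model: arguments to `docker run <image> ARGS...` replace CMD,
    while ENTRYPOINT is kept and placed in front of whatever remains."""
    args = run_args if run_args else (cmd or [])
    return (entrypoint or []) + args
```

With ENTRYPOINT ["python", "bot.py"] and CMD ["--debug"], a plain docker run runs python bot.py --debug, while docker run <image> --quiet runs python bot.py --quiet. Here bot.py is a hypothetical script name.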

The chapter ends with a few exercises that reinforce the Dockerfile concepts explained by the author. One of the exercises has a typo in the book, which is corrected in the GitHub repo.
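Keeping the build context minimal, one of the guidelines above, usually comes down to a good .dockerignore file. The sketch below approximates its rules in Python. Blank lines and # comments are skipped, a leading ! turns a pattern into an exception, the last matching pattern wins, and a matching directory excludes everything under it. This is a simplification, not Docker's matcher: fnmatch's * also matches /, and ** is not handled specially. The function names are hypothetical.

```python
import fnmatch
from typing import List, Tuple


def parse_dockerignore(text: str) -> List[Tuple[str, bool]]:
    """Return (pattern, is_exception) pairs, skipping blank lines and # comments."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        exception = line.startswith("!")
        pattern = line[1:].strip() if exception else line
        rules.append((pattern.strip("/"), exception))
    return rules


def _matches(path: str, pattern: str) -> bool:
    """True if the path itself or one of its parent directories matches the pattern."""
    parts = path.split("/")
    return any(fnmatch.fnmatchcase("/".join(parts[:i]), pattern)
               for i in range(1, len(parts) + 1))


def is_ignored(path: str, rules: List[Tuple[str, bool]]) -> bool:
    """The last matching rule decides; an exception (!) re-includes the path."""
    ignored = False
    for pattern, exception in rules:
        if _matches(path, pattern):
            ignored = not exception
    return ignored
```

For example, with the rules *.md, !README.md and data, notes.md and data/train.csv stay out of the build context while README.md is still sent.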

Understanding Docker Volumes

Containers are usually stateless and are invoked to perform a specific microservice task. If a microservice needs to save state, one usually turns to a persistent database. However, there are use cases where one would want the Docker container itself to persist data. Moreover, in the development phase it makes sense to map the source directory into the container, so that the image need not be rebuilt for every code change. Docker offers the following ways to manage data in containers:

tmpfs mounts

These mounts are best for containers that generate data that doesn’t need to be persisted and doesn’t have to be written to the container’s writable layer.

bind mounts

This is the case where you map a specific host directory to a container directory. All changes in the host directory are automatically reflected in the container directory and vice versa. Since data flows both ways, it is suggested that you make the bind mount read-only whenever the container only needs to read the data. This way, a rogue command run in the container has no impact on the host system.
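The tmpfs and read-only bind mounts described above map onto docker run --mount options. The sketch below builds such a command as an argument list in Python and only executes it when run_command is called. The image python:3.11-slim, the container paths /app and /scratch, and both helper names are assumptions for illustration.

```python
import os
import shlex
import subprocess
from typing import List


def build_mount_command(host_dir: str, image: str = "python:3.11-slim") -> List[str]:
    """Build a `docker run` argv with a read-only bind mount and a tmpfs mount."""
    source = os.path.abspath(host_dir)  # a bind mount needs an absolute host path
    return [
        "docker", "run", "--rm",
        # bind mount: host directory visible at /app; readonly protects the host files
        "--mount", f"type=bind,source={source},target=/app,readonly",
        # tmpfs mount: kept in host memory only, gone when the container stops
        "--mount", "type=tmpfs,destination=/scratch",
        image, "ls", "/app",
    ]


def run_command(argv: List[str]) -> int:
    """Execute the command (needs the docker CLI and a running daemon)."""
    print("+", shlex.join(argv))
    return subprocess.run(argv, check=False).returncode
```

For example, run_command(build_mount_command(".")) would list the current directory from inside a container that cannot modify it. Passing an argument list instead of a shell string avoids quoting problems with paths that contain spaces.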

Volumes

Docker volumes are the recommended way to persist data. In this case, docker manages the stored data. This option has several advantages over bind mounts:

- Easy to back up or transfer

- Works on both Linux and Windows

- Can be shared across multiple containers

Docker stores volumes under /var/lib/docker/volumes/ on the host and manages the mapping of files between the host and container directories.

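Named volumes can also be managed from Python with the Docker SDK for Python. The following hedged sketch assumes the docker package and a running daemon. The volume name ml-data, the alpine image and the helper names are hypothetical. One container writes a file into the volume, and a second container, mounting the same volume read-only, reads it back. This illustrates the sharing advantage listed above.

```python
# Assumes `pip install docker` and a running Docker daemon; names are hypothetical.
try:
    import docker
except ImportError:
    docker = None


def volume_spec(volume_name: str, container_path: str, read_only: bool = False) -> dict:
    """The volumes= mapping accepted by client.containers.run()."""
    return {volume_name: {"bind": container_path, "mode": "ro" if read_only else "rw"}}


def write_then_read(volume_name: str = "ml-data") -> bytes:
    """Write a file from one container, then read it from another via the same volume."""
    if docker is None:
        raise RuntimeError("the 'docker' package is not installed")
    client = docker.from_env()
    client.volumes.create(name=volume_name)  # like `docker volume create ml-data`
    client.containers.run("alpine", ["sh", "-c", "echo hello > /data/msg.txt"],
                          volumes=volume_spec(volume_name, "/data"), remove=True)
    # The second container mounts the volume read-only and prints the file.
    return client.containers.run("alpine", ["cat", "/data/msg.txt"],
                                 volumes=volume_spec(volume_name, "/data", read_only=True),
                                 remove=True)
```

Because the data lives in the volume rather than in either container, both containers can be removed (remove=True) without losing the file.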

Understanding Docker Networks

Containers facilitate the implementation of a microservices architecture. These microservices need to communicate with each other, and hence Docker comes with several networking options. Docker’s networking subsystem is implemented by pluggable drivers. Docker comes with the following drivers out of the box:

- bridge

- host

- overlay

- macvlan

- none

Bridge Network

A user-defined bridge network allows all containers connected to the same network to communicate. Containers on the same bridge network are able to connect to, discover and talk to each other, while those not on the same bridge cannot communicate directly with each other. Bridge networks are useful when containers running on the same host need to talk to each other. When Docker is installed and started, a default bridge network is created, and newly started containers connect to it unless another network is specified.

Host Network

In this scenario, the container shares the Docker host’s network stack instead of getting its own. A service listening on a port inside the container is reachable directly on that port of the host, so there is no need to specify any port mapping. This is useful if there is only one container running on the Docker host.

Overlay Network

This helps in creating a network spanning multiple docker hosts. It is called an overlay because it overlays the existing host network, allowing containers connected to the overlay network to communicate across multiple hosts.

None Networking

In this mode, the container is not connected to any external network; only a loopback interface is available inside it.
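The bridge, host and none modes can be selected from Python with the Docker SDK for Python. This hedged sketch assumes the docker package and a running daemon. It creates a user-defined bridge network, and a second container reaches the first by its container name, because Docker's embedded DNS resolves container names on user-defined networks. The network name app-net, the container name server and the helper names are hypothetical.

```python
# Assumes `pip install docker` and a running Docker daemon; names are hypothetical.
try:
    import docker
except ImportError:
    docker = None


def network_kwargs(mode: str, network_name: str = "app-net") -> dict:
    """Keyword arguments for client.containers.run() for a given networking mode."""
    if mode == "bridge":
        return {"network": network_name}  # join a user-defined bridge network
    if mode in ("host", "none"):
        return {"network_mode": mode}      # share the host stack, or loopback only
    raise ValueError(f"unsupported mode: {mode}")


def ping_by_name(network_name: str = "app-net") -> bytes:
    """Start one container on a bridge network and ping it by name from another."""
    if docker is None:
        raise RuntimeError("the 'docker' package is not installed")
    client = docker.from_env()
    net = client.networks.create(network_name, driver="bridge")  # docker network create
    server = client.containers.run("alpine", ["sleep", "60"], name="server",
                                   detach=True, **network_kwargs("bridge", network_name))
    try:
        # "server" is resolved by Docker's embedded DNS on the user-defined network
        return client.containers.run("alpine", ["ping", "-c", "1", "server"], remove=True,
                                     **network_kwargs("bridge", network_name))
    finally:
        server.remove(force=True)
        net.remove()
```

The finally block removes the helper container and the network even if the ping fails, so the sketch can be rerun without name conflicts.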

The relevant commands are


Understanding Docker Compose

Docker Compose is a tool for running multi-container applications, bringing up various linked, dependent containers. Docker makes it easy to map each microservice to a container, and Docker Compose makes orchestrating all of these containers very easy. Without Docker Compose, our container orchestration steps would involve building the various images, creating the required networks, and then running the application through a series of docker run commands in a specific order. As the number of containers increases, these steps become very tedious.

Docker Compose brings up all the containers with a single command, driven by a YAML file.

A Docker Compose file is a YAML file that defines the services, networks and volumes that are required for the application to be started. By default, docker-compose expects the file to be present in the directory from which the command is invoked, with the name docker-compose.yml (docker-compose.yaml also works); a different file can be passed with the -f flag.
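To make the services, networks and volumes structure concrete, here is a hypothetical definition for a database, a cache and a web server. It is written as a Python dictionary and printed with the standard json module. The images, service names, port 8080 and the placeholder password are assumptions, and this is not the book's sample file. The json module only keeps the sketch dependency-free; with PyYAML installed, yaml.safe_dump(compose) would write the same structure as conventional YAML.

```python
import json

# Hypothetical compose definition: a database, a cache and a web server.
compose = {
    "version": "3.7",  # needed by docker-compose 1.x; newer Compose ignores it
    "services": {
        "db": {
            "image": "postgres:15",
            "environment": {"POSTGRES_PASSWORD": "change-me"},  # placeholder secret
            "volumes": ["db-data:/var/lib/postgresql/data"],    # named volume for persistence
            "networks": ["backend"],
        },
        "cache": {
            "image": "redis",
            "networks": ["backend"],
        },
        "web": {
            "image": "nginx",
            "ports": ["8080:80"],           # host:container, like `docker run -p`
            "depends_on": ["db", "cache"],  # start order only, not readiness
            "networks": ["backend"],
        },
    },
    "networks": {"backend": {"driver": "bridge"}},
    "volumes": {"db-data": {}},
}

print(json.dumps(compose, indent=2))
```

Note that depends_on only controls start order; it does not wait for the database to be ready to accept connections.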

The following is a sample Docker Compose file that is meant to orchestrate the creation of a database server, a web server and a caching server.


Quick reference

The following are some of the common commands used in the Docker compose file


The commands associated with docker-compose are


Takeaway

Docker skills have become absolutely important for any data engineer, data scientist or ML engineer. Gone are the days when models could be trained on a laptop or a custom GPU. Very likely, one will have to spin up a cluster in all three phases of ML: Build, Train and Deploy. This book offers a gentle introduction to the Docker world. The concepts covered in the book are enough to get you going with containerizing an ML app and using any of the existing cloud providers to train and deploy the model. The fact that it uses a running Python example throughout the book makes Docker concepts easy to understand for a wider audience.