Bumping

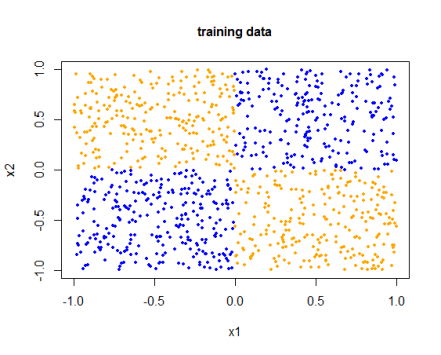

Classification trees fail miserably in some cases, and in such situations bumping might be a good method. A stylized example of bumping is as follows: imagine that there are two covariates x1 and x2, and the true class labels depend on XORing the two covariates. The orange labels represent one class and the blue labels represent the other class.

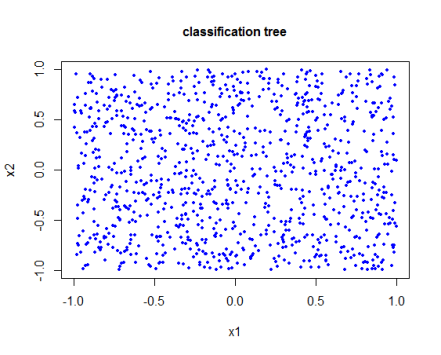
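A minimal sketch of how data like this can be simulated. It assumes x1 and x2 are drawn uniformly from [-1, 1] and that the XOR is taken of their signs. The distribution, the sample size, the seed and the helper name `make_xor_data` are all hypothetical and are not taken from the original figure.

```python
import random


def make_xor_data(n, seed=0):
    """Hypothetical XOR data set: x1, x2 ~ Uniform(-1, 1), and the class is
    1 ("orange") when exactly one covariate is positive, 0 ("blue") otherwise."""
    rng = random.Random(seed)
    X = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    # XOR of the two sign indicators: neither covariate alone determines the class
    y = [int((x1 > 0) != (x2 > 0)) for x1, x2 in X]
    return X, y


X, y = make_xor_data(200)
print(f"{sum(y)} of {len(y)} points are class 1")
```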

If you run any sort of plain vanilla classification algorithm that does greedy binary splits, the algo will fail. For example if you run a classification tree on this, the results would look something like this (almost all the observations get assigned to a specific class) :

The reason for the failure is that recursive split, by considering ONLY one of the two variables is going to fail miserably as class labels are dependent on XORed data.

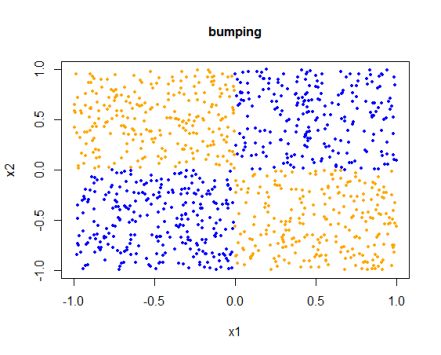
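To see why the greedy search stalls, the sketch below measures the largest impurity reduction that any single axis-aligned split x[j] <= t can achieve on simulated XOR data. The data generator, the Gini criterion, the sample size and the helper names (`make_xor_data`, `gini`, `best_single_split_gain`) are assumptions for illustration. Since each class makes up about half of every half-plane, the best gain should be tiny compared with the root impurity of about 0.5, even though two splits at x1 = 0 and x2 = 0 would separate the classes perfectly.

```python
import random


def make_xor_data(n, seed=0):
    # Hypothetical XOR data: class 1 iff exactly one covariate is positive
    rng = random.Random(seed)
    X = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    y = [int((x1 > 0) != (x2 > 0)) for x1, x2 in X]
    return X, y


def gini(labels):
    # Gini impurity 2p(1-p) of a list of 0/1 labels
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_single_split_gain(X, y):
    """Largest drop in weighted Gini impurity from ONE split x[j] <= t."""
    parent, n = gini(y), len(y)
    best = (-1.0, None, None)  # (gain, feature, threshold); any real split beats -1
    for j in range(2):
        # skip the largest value: splitting there would leave the right side empty
        for t in sorted({x[j] for x in X})[:-1]:
            left = [lab for x, lab in zip(X, y) if x[j] <= t]
            right = [lab for x, lab in zip(X, y) if x[j] > t]
            gain = parent - (len(left) * gini(left) + len(right) * gini(right)) / n
            if gain > best[0]:
                best = (gain, j, t)
    return best


X, y = make_xor_data(400)
gain, feature, threshold = best_single_split_gain(X, y)
print(f"root impurity {gini(y):.3f}, best single-split gain {gain:.4f} "
      f"(x{feature + 1} <= {threshold:.2f})")
```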

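A nifty search method called ‘Bumping’ solves this problem. The basic idea is that you draw bootstrap samples of the training data, fit a classification tree to each one, and then keep the single tree (among the bootstrap fits, plus the tree fit to the original data) that has the smallest prediction error on the original training set. Bumping picks one model rather than letting the bootstrapped trees vote; voting across bootstrap fits is bagging. Because each bootstrap sample breaks the balance between the classes a little differently, some bootstrapped trees will by chance make their first split near the right place. By merely doing 20 bootstrap replications, you get a reasonably good classification.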
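A minimal sketch of the bumping loop, written in pure Python so it needs no libraries. Everything specific here is an assumption for illustration: the hypothetical helpers `make_xor_data`, `best_split`, `grow_tree`, `predict`, `training_error` and `bump`, the uniform XOR data, the depth-2 Gini trees, and the seeds. The selection rule is the one that distinguishes bumping from bagging: fit a tree to the original data and to each bootstrap sample, then keep the single tree with the lowest error on the original training set.

```python
import random


def make_xor_data(n, seed=0):
    # Hypothetical XOR data: class 1 iff exactly one covariate is positive
    rng = random.Random(seed)
    X = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    y = [int((x1 > 0) != (x2 > 0)) for x1, x2 in X]
    return X, y


def best_split(X, y):
    """Greedy search over every axis-aligned split; returns (feature, threshold)
    minimizing the weighted Gini impurity of the two children, or None."""
    n, total_pos = len(y), sum(y)
    best, best_score = None, None
    for j in range(2):
        order = sorted(range(n), key=lambda i: X[i][j])
        left_pos = 0
        for k in range(1, n):  # the first k sorted points go left
            left_pos += y[order[k - 1]]
            lo, hi = X[order[k - 1]][j], X[order[k]][j]
            if lo == hi:
                continue  # tied values (common in bootstrap samples) cannot be separated
            right_pos = total_pos - left_pos
            # proportional to the weighted Gini: sum over children of pos * neg / size
            score = (left_pos * (k - left_pos) / k
                     + right_pos * (n - k - right_pos) / (n - k))
            if best_score is None or score < best_score:
                best, best_score = (j, (lo + hi) / 2), score
    return best


def grow_tree(X, y, depth=2):
    """Greedy tree with at most `depth` levels of splits; a leaf is an int class."""
    split = best_split(X, y) if depth > 0 and len(set(y)) > 1 else None
    if split is None:
        return int(2 * sum(y) >= len(y))  # majority class (ties go to 1)
    j, t = split
    left = [i for i in range(len(y)) if X[i][j] <= t]
    right = [i for i in range(len(y)) if X[i][j] > t]
    return (j, t,
            grow_tree([X[i] for i in left], [y[i] for i in left], depth - 1),
            grow_tree([X[i] for i in right], [y[i] for i in right], depth - 1))


def predict(tree, x):
    # Walk down internal nodes (tuples) until reaching a leaf (int)
    while isinstance(tree, tuple):
        j, t, left, right = tree
        tree = left if x[j] <= t else right
    return tree


def training_error(tree, X, y):
    return sum(predict(tree, x) != lab for x, lab in zip(X, y)) / len(y)


def bump(X, y, n_boot=20, depth=2, seed=1):
    """Bumping: fit a tree to the original data and to n_boot bootstrap samples,
    then keep the single tree with the lowest error on the ORIGINAL training set."""
    rng = random.Random(seed)
    candidates = [grow_tree(X, y, depth)]  # the original sample is a candidate too
    for _ in range(n_boot):
        idx = [rng.randrange(len(y)) for _ in range(len(y))]
        candidates.append(grow_tree([X[i] for i in idx], [y[i] for i in idx], depth))
    # min() keeps the first minimum, so ties go to the original fit
    return min(candidates, key=lambda tree: training_error(tree, X, y))


X, y = make_xor_data(200)
plain = grow_tree(X, y)  # one greedy tree on the original data
bumped = bump(X, y)      # best of the original fit plus 20 bootstrap fits
print("plain tree training error: ", training_error(plain, X, y))
print("bumped tree training error:", training_error(bumped, X, y))
```

Because the tree fit to the original data is one of the candidates, the bumped tree can never have a higher training error than the plain greedy tree; how much lower it is depends on the data and on the number of bootstrap samples. Comparing candidates by training error is only sensible because they all have roughly the same complexity (here, at most two levels of splits).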