Matrix Algebra: Theory, Computations, and Applications in Statistics

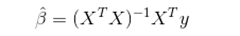

We often come across mathematical expressions written in matrix form and assume that the numerical calculation happens exactly the way the expression appears. Take, for example,

$$\hat{\beta} = (X^T X)^{-1} X^T y$$

These are the well-known “normal equations” used to compute regression coefficients. One might look at this expression and conclude that the code that computes $\beta$ inverts the Gramian matrix $X^T X$ and then multiplies the inverse by $X^T y$. Totally false. Why? The condition number of the Gramian matrix $X^T X$ (in the 2-norm) equals the square of the condition number of $X$, and the higher the condition number of a matrix, the more sensitive the computed solution is to rounding errors. Forming $X^T X$ explicitly therefore makes an ill-conditioned problem considerably worse. This is the recurrent theme of the book by James E. Gentle.

The form of a mathematical expression and the way the expression should be evaluated in actual practice may be quite different
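To make the point about the normal equations concrete, here is a minimal NumPy sketch. It is only an illustration under my own assumptions, not code from the book: the design matrix (a small Vandermonde matrix), the sizes `n` and `p`, the noise level and all variable names are made up. It compares the condition numbers of $X$ and $X^T X$, and then computes the coefficients three ways: via the explicit Gramian, via a QR factorization, and via `np.linalg.lstsq`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: polynomial design matrix with p columns (1, t, t^2, t^3).
n, p = 200, 4
t = np.linspace(0.0, 1.0, n)
X = np.vander(t, p, increasing=True)
beta_true = np.arange(1, p + 1, dtype=float)
y = X @ beta_true + 1e-3 * rng.standard_normal(n)

# 2-norm condition numbers: cond(X^T X) is (up to rounding) cond(X)**2.
cond_X = np.linalg.cond(X)
cond_gram = np.linalg.cond(X.T @ X)
print("cond(X)     =", cond_X)
print("cond(X^T X) =", cond_gram)
print("cond(X)**2  =", cond_X**2)

# 1) Textbook route: form the Gramian and solve the normal equations.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# 2) QR route: X = QR, then solve R beta = Q^T y without ever forming X^T X.
#    (np.linalg.solve is a general solver; a dedicated triangular solver
#    would exploit the structure of R.)
Q, R = np.linalg.qr(X)  # reduced QR: Q is n x p, R is p x p
beta_qr = np.linalg.solve(R, Q.T @ y)

# 3) Library least-squares routine (SVD-based in NumPy).
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

print("normal equations:", beta_normal)
print("QR              :", beta_qr)
print("lstsq           :", beta_lstsq)
```

On a small, mildly conditioned problem like this one, all three routes agree closely. The difference shows up as the columns of $X$ become more nearly collinear: the QR and SVD routes work with a problem whose conditioning is that of $X$, while the Gramian route works with its square.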

The book is a magnum opus (~500 pages) on linear algebra. It is divided into three parts. The first part deals with the theoretical aspects of matrices. The second part highlights various applications where matrices become almost your best friends. The third part of the book deals with numerical linear algebra.

At some point in one’s learning path, every statistician needs to know the basic difference between the real numbers of mathematics and floating-point numbers (the numbers that are actually stored in a computer). Simple mathematical laws like associativity, (a + b) + c = a + (b + c), do not always hold for floating-point numbers. In fact, the set of floating-point numbers in a computer is a complicated finite mathematical structure. As statisticians or practitioners, we need not know such things in great depth, but I think one must have at least a passing familiarity with these principles.

You could easily spend 3 to 4 months understanding every aspect of what an SVD implementation entails, but it is not necessary for everyone to get into that level of detail. In that sense, an end user can speed-read the last part of the book, because the technology to do all the complicated stuff is mature and is available in MATLAB, R, Octave, Python, etc. However, if you slow down and read through the content, you will develop a ton of appreciation for the software that lets you do SVD, eigen and QR factorizations with one line of R, Python or MATLAB code.
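The failure of associativity mentioned above is easy to see for yourself. The snippet below is a small illustration of my own (the particular numbers are just a convenient choice, not an example taken from the book), using ordinary Python floats, which are IEEE 754 double-precision numbers.

```python
import math

a, b, c = 0.1, 0.2, 0.3

left = (a + b) + c   # 0.1 + 0.2 is rounded first, then 0.3 is added
right = a + (b + c)  # 0.2 + 0.3 is rounded first, then 0.1 is added

print(left)           # 0.6000000000000001
print(right)          # 0.6
print(left == right)  # False: the grouping changed the rounded result

# The two results differ only in the last bits, so comparisons between
# floats are usually done with a tolerance rather than with ==.
print(math.isclose(left, right))  # True
```

None of the three inputs is exactly representable in binary, so each intermediate sum is rounded, and the rounding depends on the order in which the sums are formed.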

The first two parts of the book bring out a ton of amazing things about matrices. There are close to 650 equations in the entire book, all of which are explained without using words such as theorem or lemma. At the same time, I don’t think there is even a single equation where the rationale behind it is swept under the carpet.

If you read this book cover to cover with rapt attention, my guess is that you will start noticing matrices, classes of matrices and matrix operations EVERYWHERE, in whatever applied work you do. You can’t help but notice them. That’s the beauty of this book.